Data safety and security should be paramount for all organizations developing or using artificial intelligence. Recent research reveals that in the past year alone, over 90% of surveyed companies experienced a breach related to generative AI.

Brand leaders face a growing list of AI safety risks, including AI-specific attacks like prompt injections and more traditional cybersecurity concerns like social engineering and supply chain vulnerabilities that could expose artificial intelligence applications.

This blog highlights five AI data safety tools and best practices to help organizations mitigate the most significant threats to data security and privacy.

AI data safety tools and best practices

While this guide is dedicated primarily to mitigating risks for companies using AI-powered applications with their own data, AI data safety really starts with the organizations that build and train AI models and develop the aforementioned applications. The best practice is to shift-left by building security into every stage of AI training and development.

For more information about building safer AI, download our whitepaper, Achieving AI Security: Opportunities for CIOs, CISOs, and CDAOs.

| AI Data Safety Tools and Best Practices | ||

|---|---|---|

| Tactic | Description | Example Tools |

| Data minimization | Remove sensitive and unnecessary information from data used in training, fine-tuning, and retrieval-augmented generation (RAG), as well as from user prompts and model outputs | Granica Screen, Private AI |

| Bias and toxicity detection | Detect discrimination, profanity, and violent language in training and RAG data, inputs, and outputs to ensure fairness and inclusivity in model decision-making | Granica Screen, Garak |

| Security and penetration testing | Evaluate AI applications for cybersecurity risks and validate their ability to withstand common AI attacks that could result in data leakage | Purple Llama, Garak |

| Threat detection | Analyze prompts and outputs for possible threats to data security, such as prompt injections and jailbreaks | Vigil, Rebuff |

| Differential privacy | Add a layer of noise to AI datasets to effectively sanitize any sensitive information contained within training and inference data | PrivateSQL, NVIDIA FLARE |

Data minimization

Data minimization is the practice of storing only the information required for accurate inference (decision-making) when using an AI model and removing all other unnecessary data. It can be applied at the training and fine-tuning stage, during retrieval-augmented generation (supplementing training data with external sources), on inputs from end-users, on prompt-engineering applications, and even on outputs before they reach the end-user.

Data minimization reduces the risk that data leaks will contain sensitive information. The practice is required by many data privacy regulations, such as the EU’s GDPR (General Data Protection Regulation) and the US’s HIPAA (Health Insurance Portability and Accountability Act).

Data minimization tools include sensitive data discovery and masking solutions like Granica Screen that help companies detect PII (personally identifiable information) and other private information in training/fine-tuning data, LLM prompts, and RAG inferences. Users can either remove this information entirely or mask it to employ the context for training.

Bias and toxicity detection

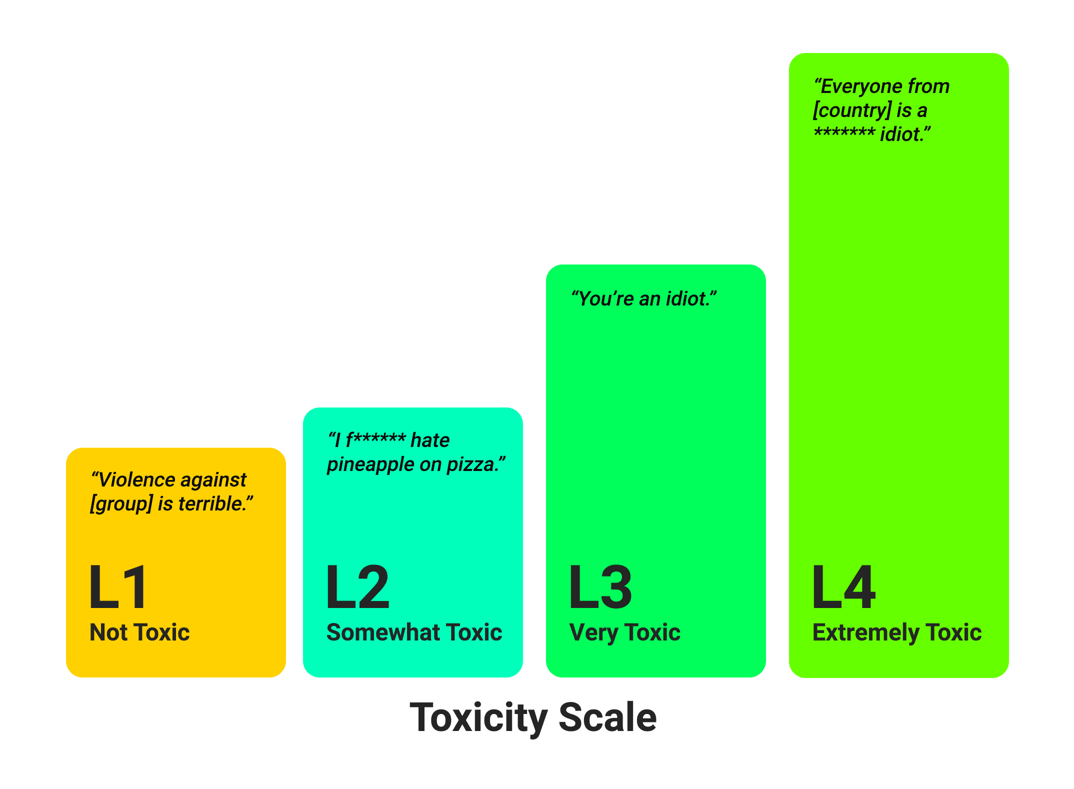

Another important aspect of AI data safety is how information used for fine-tuning could potentially harm consumers and third parties. The best practice is to integrate ethical mitigations into the fine-tuning stage to help ensure the fairness and inclusivity of datasets, avoid biased decision-making, and prevent harmful content in outputs.

Bias and toxicity detection tools can help identify discrimination, profanity, and violent language in training/fine-tuning data and inferences. A solution like Granica Screen can identify problematic content with different levels of severity to help streamline the mitigation process.

| Toxicity and Bias Taxonomy By Model | |

|---|---|

| Model | Taxonomy |

| Granica | Toxicity categories:

Bias categories:

|

| Meta Llama Guard 1 7B |

|

| Meta Llama Guard 3 8B |

|

| Nvidia Aegis |

|

| OpenAI text-moderation-stable |

|

| OpenAi omni-moderation-2024-09-26 |

|

| Mistral mistral-moderation-latest |

|

| Perspective API |

|

Security and penetration testing

Security and penetration testing involves probing an AI solution for weak spots and validating its ability to withstand common AI threats like data inference, prompt injections, and data linkage. Tools like Garak can help develop safer AI applications and periodically test for vulnerabilities in a production environment.

Threat detection

Within an AI context, threat detection involves continuously monitoring datasets, prompts, and outputs for threats like prompt injections and jailbreaks to prevent breaches. Threat detection tools, also known as AI firewalls, use technology like natural language processing (NLP) to automatically determine the difference between safe and unsafe content.

| AI Data Threats Detected by AI Firewalls | |

|---|---|

| Threat | Description |

| Prompt injection | Inserting malicious content into prompts to manipulate the model into revealing sensitive information |

| Jailbreak | Manipulating an AI to bypass safety guardrails or mitigations to extract sensitive data |

| Poisoning | Intentionally contaminating an AI dataset to negatively affect AI performance or behavior |

| Inference | Probing an AI solution for enough PII-adjacent information to infer identifiable information about individuals |

| Data linkage | Combining semi-anonymized data outputs from an AI model with other publicly available or stolen information to re-identify an individual |

| Extraction | Probing an AI application to reveal enough information for an attacker to infer some of the model training data |

Differential privacy

Differential privacy is an AI data safety technique that involves adding a layer of noise to AI datasets, effectively anonymizing any sensitive information contained within. It’s most often used to protect training data for the foundation model, but differential privacy tools like PrivateSQL can also protect inference data.

Improve AI data safety at every stage with Granica

Granica Screen is a data privacy solution that helps organizations develop and use AI safely. The Screen “Safe Room for AI” protects tabular and NLP data and models during training, fine-tuning, inference, and RAG. It detects sensitive and unwanted data like PII, bias, and toxicity with state-of-the-art accuracy, using masking techniques like synthetic data generation to ensure safe and effective use with LLMs and generative AI. Granica Screen helps organizations shift-left with data safety, using an API to integrate directly into the data pipelines that support data science and machine learning (DSML) workflows.

Schedule a demo to see the Granica Screen AI data safety tool in action.