As a company’s data assets grow to support initiatives like data science, machine learning (ML), and artificial intelligence (AI), so too do the costs associated with storing and moving that data. Many organizations use cloud-based data lakes and lakehouses to help mitigate infrastructure and maintenance costs. These lakes and lakehouses are built on top of cloud object storage, but object storage services often charge hidden fees that add up over time and can offset any potential savings.

Of these hidden fees, some of the most significant are cloud data transfer costs. Cloud providers almost universally allow you to transfer data into their platform for free, but they charge per gigabyte for any data transferred (or copied) out. Data transfer fees make it more expensive to integrate cloud data stores with AI/ML and analytics pipelines in other clouds, or even in other regions of the same provider. These fees often catch businesses off guard and prevent them from meeting ROI goals.

This post breaks down some of the average cloud data transfer costs and offers some tips and solutions for keeping these expenses in check.

Granica Crunch is a data compression service for cloud data lakes and lakehouses that helps control storage and transfer expenses. Check out our cloud cost savings calculator to estimate how much Crunch can save you.

How to estimate cloud data transfer costs

Each cloud provider may use slightly different methods to calculate data transfer fees, but they all share some similarities. Providers typically use sensors to measure data transfer by customer and service. The major vendors allow a certain amount of data to transfer out for free, but once a customer passes that threshold, the provider starts charging for each additional gigabyte. Data transfer fees are usually added to the monthly bill rather than charged immediately.
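The free-threshold-then-charge model described above can be sketched as a small calculation. This is a minimal illustration, not any provider's billing logic: the function name `estimate_egress_cost`, the tier layout, and the rates in `EXAMPLE_TIERS` are placeholders chosen for the example, so substitute the figures from your provider's current pricing page.

```python
def estimate_egress_cost(gb_out, free_gb, tiers):
    """Estimate a monthly data transfer (egress) charge.

    gb_out:  total gigabytes transferred out during the month
    free_gb: the provider's free transfer threshold
    tiers:   list of (tier_size_gb, price_per_gb); use None as the size
             of the final, unbounded tier
    """
    billable = max(0.0, gb_out - free_gb)
    cost = 0.0
    for size_gb, price_per_gb in tiers:
        if billable <= 0:
            break
        # Charge only the portion of the remaining volume that fits in this tier.
        in_tier = billable if size_gb is None else min(billable, size_gb)
        cost += in_tier * price_per_gb
        billable -= in_tier
    return cost


# Placeholder rates for illustration only, NOT real provider pricing.
EXAMPLE_TIERS = [(10_000, 0.10), (None, 0.05)]

if __name__ == "__main__":
    for volume in (50, 600, 25_000):
        estimate = estimate_egress_cost(volume, free_gb=100, tiers=EXAMPLE_TIERS)
        print(f"{volume} GB out -> estimated charge {estimate:.2f}")
```

Modeling the tiers as a list keeps the sketch usable for providers whose per-gigabyte rate changes with volume, which is one reason real bills are hard to predict by eye.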

It’s important to note that AWS, Google Cloud, and Microsoft Azure all announced earlier this year that they would waive data transfer fees for customers leaving their platforms for another service. However, most customers are more concerned with the costs of replicating data to third-party services and pipelines, and those costs have not been eliminated. Examples of cloud data transfer activities that still incur charges include:

- Data transfers from a cloud platform to the public internet

- Data replication between a provider’s available regions

- Data replication between different cloud services offered by the same provider

Data transfer pricing can get complex because it varies by data volume, service, and transfer destination. The table below provides some basic cost information for data transfer from a cloud platform to an external service.

Pricing data pulled from provider websites

Cloud Data Transfer Costs by Provider

| Cloud Provider | Free Transfer Threshold | Standard Data Transfer Fees |
|---|---|---|
| Amazon Web Services (AWS) EC2 | 100 GB | |
| Google Cloud | 200 GB | Varies depending on the source region of the traffic. For Northern Virginia: |
| Microsoft Azure | 100 GB | Varies depending on the source region of the traffic. For North America and Europe: |

How to reduce cloud data transfer costs

The complicated structure of cloud egress pricing can make it difficult to control costs, but the following strategies and technologies can help.

Cloud cost allocation

It’s hard to estimate or reduce data transfer costs without knowing how much data is leaving the cloud environment each month. Many platforms allow companies to tag specific cost generators, including data transfer, which makes it easier to track utilization over time. In addition, some third-party cloud cost management tools provide enhanced capabilities such as the ability to unify cost allocation dashboards across multiple cloud platforms.
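As a rough illustration of tag-based cost allocation, the sketch below totals outbound transfer volume per tag value from a list of billing records. The record layout (`usage_type`, `gb`, `tags`) and the `"data_transfer_out"` label are assumptions made for this example. Real billing exports use provider-specific field names, so treat this as a pattern rather than a drop-in script.

```python
from collections import defaultdict


def egress_gb_by_tag(records, tag_key):
    """Sum outbound transfer volume (GB) per value of the given tag.

    `records` is a list of dicts in a hypothetical, simplified billing format:
    {"usage_type": str, "gb": float, "tags": {tag_name: tag_value}}
    """
    totals = defaultdict(float)
    for rec in records:
        if rec["usage_type"] != "data_transfer_out":
            continue  # ignore storage, compute, and inbound transfer lines
        owner = rec.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += rec["gb"]
    return dict(totals)


# Made-up sample records for demonstration.
SAMPLE_RECORDS = [
    {"usage_type": "data_transfer_out", "gb": 120.0, "tags": {"team": "ml"}},
    {"usage_type": "data_transfer_out", "gb": 30.0, "tags": {"team": "analytics"}},
    {"usage_type": "storage", "gb": 900.0, "tags": {"team": "ml"}},
    {"usage_type": "data_transfer_out", "gb": 45.5, "tags": {}},
    {"usage_type": "data_transfer_out", "gb": 80.0, "tags": {"team": "ml"}},
]

if __name__ == "__main__":
    for team, gb in sorted(egress_gb_by_tag(SAMPLE_RECORDS, "team").items()):
        print(f"{team}: {gb:.1f} GB out")
```

Reporting an explicit "untagged" bucket is a deliberate choice here: untagged egress is often where unexpected transfer costs hide.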

Efficient data architectures

The design of a company’s data infrastructure can greatly affect egress costs. Storing data in the same platform, service, and region as the applications using that data can minimize transfer costs. Implementing data caching mechanisms such as a content delivery network (CDN) can also help control egress expenses, although some providers charge extra fees for CDN usage.

Cloud data compression

Lossless data compression shrinks the physical size of files in cloud data lakes and lakehouses to lower costs for both at-rest storage and data transfer. A service like Granica Crunch continuously monitors cloud data storage environments and optimizes the compression of lakehouse data formats such as Apache Parquet to control costs and improve the speed of transfers, replication, and processing.
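To make the effect of lossless compression on transfer volume concrete, here is a minimal sketch using `zlib` from the Python standard library. It is a stand-in only: it does not use zstd or Parquet and does not represent how Granica Crunch works. The helper name `transfer_savings` and the sample payload are invented for the example.

```python
import zlib


def transfer_savings(payload: bytes, level: int = 6):
    """Return (raw_bytes, compressed_bytes, fraction_saved) for a payload."""
    if not payload:
        return 0, 0, 0.0
    compressed = zlib.compress(payload, level)
    # Lossless means the round trip reproduces the input byte for byte.
    if zlib.decompress(compressed) != payload:
        raise ValueError("round trip did not reproduce the original payload")
    saved = 1 - len(compressed) / len(payload)
    return len(payload), len(compressed), saved


if __name__ == "__main__":
    # Repetitive, log-like data compresses well; real data sets will vary widely.
    sample = b"region=us-east-1,status=ok,latency_ms=12\n" * 10_000
    raw, packed, saved = transfer_savings(sample)
    print(f"raw={raw} bytes, compressed={packed} bytes, saved={saved:.1%}")
```

Because egress is billed per gigabyte, any fraction saved on the bytes leaving the cloud translates roughly into the same fraction saved on the billable transfer volume, assuming the data is transferred in compressed form.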

How Granica Crunch shrinks cloud data costs and improves ROI

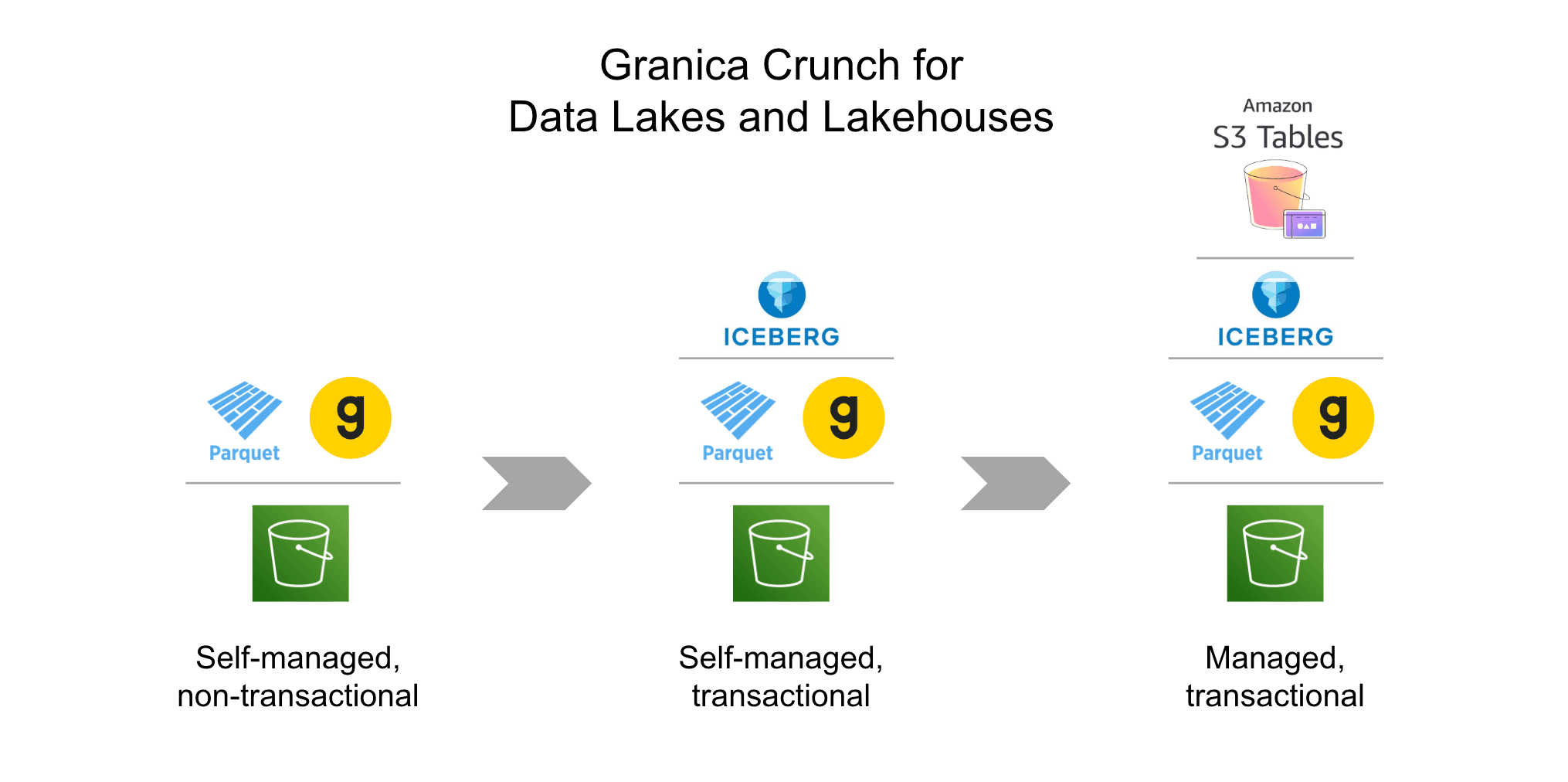

Granica Crunch is the world’s first solution for managing cloud data lake and lakehouse costs. The Granica AI data platform is deployed inside customers’ cloud environments where it analyzes the existing lakehouse Parquet files, and then optimizes the compression of those files to further reduce their physical size. Granica Crunch uses a proprietary compression control system that leverages the columnar nature of modern analytics formats like Parquet and ORC to achieve high compression ratios through underlying OSS compression algorithms such as zstd. The resulting compact files remain in standards-based Parquet format, readable by Parquet-based applications without requiring Granica for decompression.

The compression control system combines techniques like run-length encoding, dictionary encoding, and delta encoding to optimally compress data on a per-column basis. Crunch can reduce cloud data transfer costs by up to 60% for large-scale analytical, AI, and ML data sets. The smaller, more compact files also reduce at-rest storage costs by the same percentage, leading to significant savings for data-intensive environments.
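For readers unfamiliar with the three encodings named above, the sketch below shows textbook versions of each applied to a single column of values. These are simplified illustrations with hypothetical function names, not Granica's proprietary control system and not the encoders used inside Parquet libraries.

```python
from itertools import groupby


def run_length_encode(values):
    """Collapse consecutive repeats into (value, run_length) pairs."""
    return [(value, sum(1 for _ in group)) for value, group in groupby(values)]


def dictionary_encode(values):
    """Replace each value with a small integer code plus a lookup table."""
    dictionary = {}
    codes = []
    for value in values:
        # First occurrence gets the next unused code; repeats reuse it.
        codes.append(dictionary.setdefault(value, len(dictionary)))
    return list(dictionary), codes


def delta_encode(values):
    """Store the first value, then the difference from each previous value."""
    if not values:
        return []
    return [values[0]] + [b - a for a, b in zip(values, values[1:])]


if __name__ == "__main__":
    print(run_length_encode(["US", "US", "US", "DE", "DE"]))
    # [('US', 3), ('DE', 2)]
    print(dictionary_encode(["red", "blue", "red", "red"]))
    # (['red', 'blue'], [0, 1, 0, 0])
    print(delta_encode([1000, 1003, 1004, 1010]))
    # [1000, 3, 1, 6]
```

Each technique suits a different column shape: run-length encoding helps sorted or repetitive columns, dictionary encoding helps columns with few distinct values, and delta encoding helps slowly changing numeric columns such as timestamps. That is why choosing an encoding per column can pay off in columnar formats.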

Explore an interactive demo of Granica Crunch to see its compression optimization and cost-saving capabilities in action!