We are excited to announce that Granica Screen, our AI Data Safety solution, is now available on AWS Marketplace, empowering enterprises to safely and efficiently build and deploy AI. AWS customers can now easily access Granica Screen to detect sensitive and harmful data, boost model performance with synthetic data, and protect LLMs at runtime, all directly from AWS Marketplace.

In today’s landscape, deploying AI safely, ethically, and in compliance with regulations is more critical than ever. Screen addresses a key gap for data leaders by enabling smarter data management that drives real business impact, not just theoretical value. By partnering with AWS, Granica makes it easier for organizations building on AWS infrastructure to prioritize data safety when building and deploying AI.

Benefits for AWS customers

- Seamless Integration and Simplified Billing: AWS Marketplace streamlines access to Granica Screen, consolidating billing for cloud services into a single invoice. Customers can leverage existing AWS cloud commitments, simplifying procurement and potentially reducing costs while ensuring seamless integration of data safety into their AI pipelines.

- State-of-the-Art Detection Accuracy: Screen’s unmatched precision and recall for both structured data and free text, the typical formats for AI training and fine-tuning datasets, ensure no exposures or vulnerabilities go unnoticed in S3 buckets or lakehouse tables.

- Synthetic Data Generation: High-quality, realistic synthetic data can be used to augment existing training and fine-tuning datasets, minimizing the risk of sensitive information leaks and ensuring access to high-quality data for data and AI teams.

-

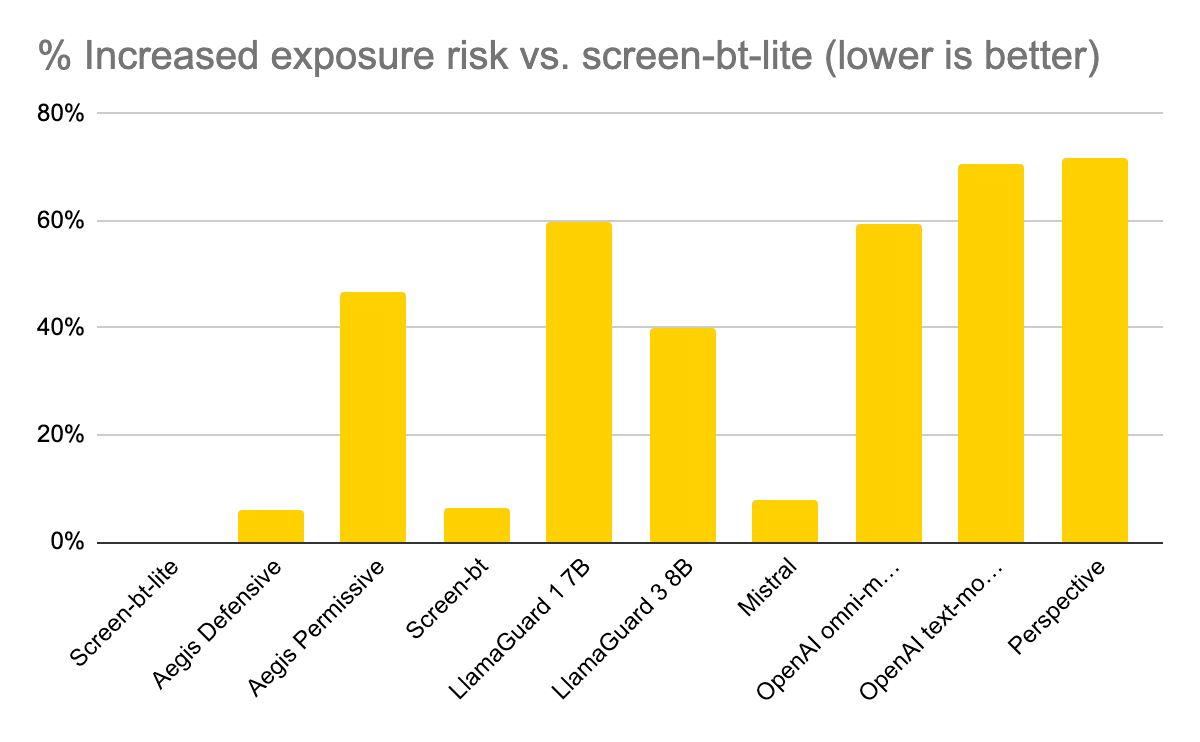

Training Data Detoxification Screen scans for various forms of bias including protected and polarization attributes, as well as toxicity categories such as hate, violence, and profanity. Toxicity scores evaluate and quantify the level of harmful content within a given dataset to identify and address toxic content before it influences model training. Training data detoxification is available via early access program for select customers.

- LLM Runtime Protection: Screen’s runtime protection dynamically masks sensitive and toxic content in LLM prompts and RAG-based applications with low latency, safeguarding both model inputs and outputs while maintaining contextual relevance. Runtime toxic content detection is available via an early access program for select customers.

The Granica and AWS partnership allows AWS customers to realize the full value of their data and ensure their AI initiatives remain safe, ethical, and compliant.

See Screen in action

Ready to build safety and trust into your data, AI models and workflows in AWS? Request a demo today.