Large language models (LLMs) are the foundational artificial intelligence technology that powers generative AI and other language-based AI tools. They’re built into applications that companies use to write software code, provide automated customer service chatbots, generate marketing content, and much more. An LLM’s human-like language capabilities and lightning-fast inferences can help organizations across sectors boost productivity and revenue, which is why there’s been such an explosion in their adoption in recent months.

The surging popularity of LLMs and other AI/ML technologies, and the increasing reliance on their decision-making skills, have unearthed significant and many-faceted concerns regarding security and safety. These include:

- How consumer data – particularly sensitive or personally identifiable information (PII) – is collected and used by an LLM.

- The fairness and inclusivity inherent in training datasets, and how to avoid biased inference (decision-making) by an LLM.

- The security of models and their supporting infrastructure, including their ability to withstand tampering (which could affect inference fairness and accuracy) or data extraction (which could expose sensitive consumer information).

- The potential for LLMs to generate illegal, violent, or otherwise harmful content in outputs to end-users.

- Ensuring that security and ethical safeguards do not negatively affect model performance and accuracy so its inferences are still trustworthy and provide business value.

The best way to mitigate these concerns is with a multi-layered security strategy that’s targeted to your use case and environment. This post describes some of the best LLM security tools to integrate into your strategy.

For a more comprehensive guide to generative AI safety, download our AI Security Whitepaper.

What do LLM security tools do?

The robust marketplace of LLM security tools offers various solutions for targeting different phases of development and usage. Some are designed to strengthen models during the initial building and training stages, helping companies shift-left by incorporating security into an LLM from the ground up. Others work at inference time, which means they’re used to evaluate or improve model security while it receives inputs and generates outputs, and as such are deployed for pre-release testing and in production. Some of the most important capabilities these tools feature include:

- Data minimization - Removes unnecessary and sensitive information from data used in training and retrieval-augmented generation (RAG) as well as from user prompts and outputs. Data minimization tools provide automatic sensitive data discovery and masking. Examples: Granica Screen, Private AI

- Security & penetration testing - Evaluates LLM solutions for cybersecurity risks and tests their ability to withstand common AI attacks like prompt injections. Security and pen testing tools help companies develop safer LLM applications, and can also be used by customers in production environments. Examples: Purple Llama, Garak

- Threat detection - Analyzes prompts and outputs for possible threats, such as prompt injections and jailbreaks to prevent data leakage or model compromise. Examples: Purple Llama, Garak, Vigil, Rebuff

- Bias & toxicity detection - Detects discrimination, profanity, and violent language in training data, RAG data, inputs, and outputs to ensure the fairness and inclusivity of decision-making. Examples: Granica Screen, Purple Llama, Garak, PrivateSQL

- Differential privacy - Adds a layer of noise to LLM datasets to effectively sanitize any sensitive information contained within. Differential privacy can protect both training data and inference data. Example: PrivateSQL, NVIDIA FLARE

- Adversarial training - Exposes an LLM to numerous examples of inputs that have been modified in ways the model might encounter in real attacks and teaches it how to respond appropriately. Adversarial training typically occurs in development but could also be used on production models. Example: Adversarial Robustness Toolkit, Microsoft Counterfit

- Federated learning - Trains an LLM on data compartmentalized across multiple decentralized computers that can’t share raw data. Federated learning happens in the initial training stages. Example: NVIDIA FLARE, Flower

Comparing the top LLM security tools

| LLM Security Tool | Capabilities |

|---|---|

| 1. Granica Screen |

• Sensitive data discovery & masking • Bias & toxicity detection • Large-scale data lake privacy • Real-time LLM prompt privacy • AI training data visibility • Cloud cost optimization |

| 1. Purple Llama |

• Security & penetration testing • Threat detection • Bias & toxicity detection |

| 1. Garak |

• Security & penetration testing • Threat detection • Bias & toxicity detection |

| 1. Vigil |

• Threat detection |

| 1. Rebuff |

• Threat detection |

| 1. Private AI |

• Sensitive data discovery & masking |

| 1. PrivateSQL |

• Differential privacy • Bias & toxicity detection |

| 1. Adversarial Robustness Toolbox |

• Adversarial training |

| 1. NVIDIA FLARE |

• Federated learning • Differential privacy |

| 1. Flower |

• Federated learning |

| 1. Microsoft Counterfit |

• Adversarial training |

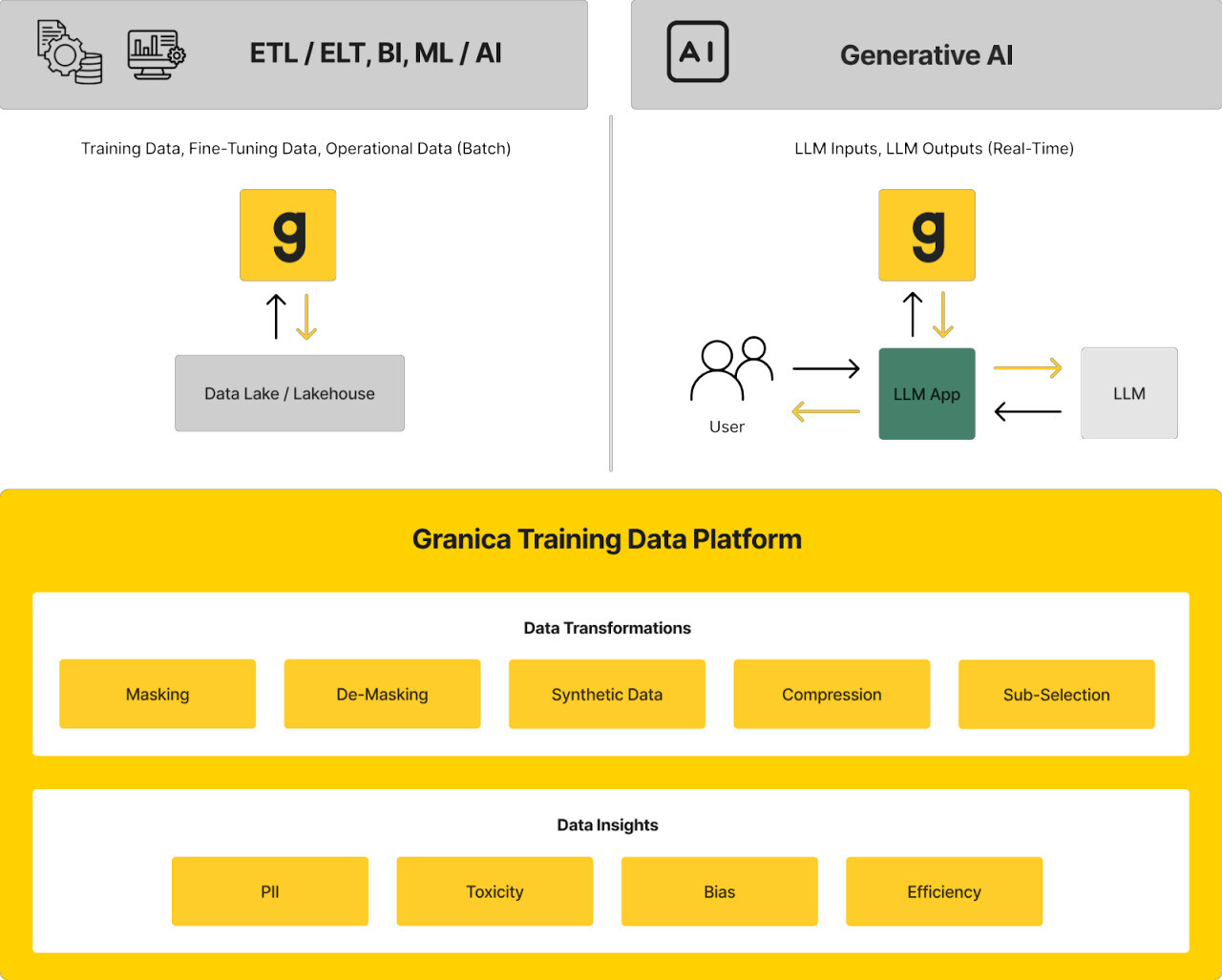

1. Granica Screen

Granica Screen is a privacy and safety solution that helps organizations develop and use AI ethically. The Screen “Safe Room for AI” protects tabular and natural language processing (NLP) data and models during training, fine-tuning, inference, and RAG. It detects sensitive and unwanted data like personally identifiable information (PII), bias, and toxicity with state-of-the-art accuracy and uses masking techniques like synthetic data generation to ensure safe and effective use with LLMs and generative AI. Granica Screen helps organizations shift-left with data privacy, using an API to integrate directly into the data pipelines that support data science and machine learning (DSML) workflows.

2. Purple Llama

Purple Llama is a set of open-source LLM safety tools and evaluation benchmarks created by Meta to help developers build ethically with open genAI models. This umbrella project is continually adding new capabilities, but its current feature-set includes the Llama Guard input and output moderation model, Prompt Guard to detect and block malicious prompts, Code Shield to mitigate the risk of the LLM generating insecure code suggestions, and CyberSec Eval benchmarks for security and penetration testing.

3. Garak

Garak is a free, open-source vulnerability scanning tool for chatbots and other LLMs. It probes for multiple varieties of weaknesses that could make an LLM fail or otherwise behave undesirably. Examples include hallucinations, data leaks, prompt injections, misinformation, toxicity generation, and jailbreaks. After scanning, Garak provides a full security report detailing the strengths and weaknesses in the model, LLM-based application, or third-party integrations.

4. Vigil

Vigil, also known as Vigil LLM to differentiate it from the many other AI-related tools called “Vigil,” is a Python library and REST API used to detect threats against LLM-based technology. It assesses LLM inputs and outputs for signs of prompt injections, jailbreaks, and other targeted AI attacks. Vigil LLM is currently in alpha state, and as such it’s still a work in progress that should be used with caution.

5. Rebuff

Rebuff is an LLM threat detection tool focused primarily on preventing prompt injection attacks. It offers protection on four fronts: heuristics to filter out potentially malicious prompts before they reach the LLM; a dedicated LLM to analyze inputs and identify malicious content; a vector database of previous attack embeddings that helps recognize and prevent future attacks; and canary tokens to detect leakages and allow the framework to store embeddings in the vector database.

6. Private AI

Private AI (not to be confused with PrivateAI, which is a different product), is a data minimization solution that identifies, anonymizes, and replaces 50+ entities of sensitive and personally identifiable information. The PrivateGPT product scrubs personal information from user prompts before they’re sent to ChatGPT to mitigate the risk of accidental leaks.

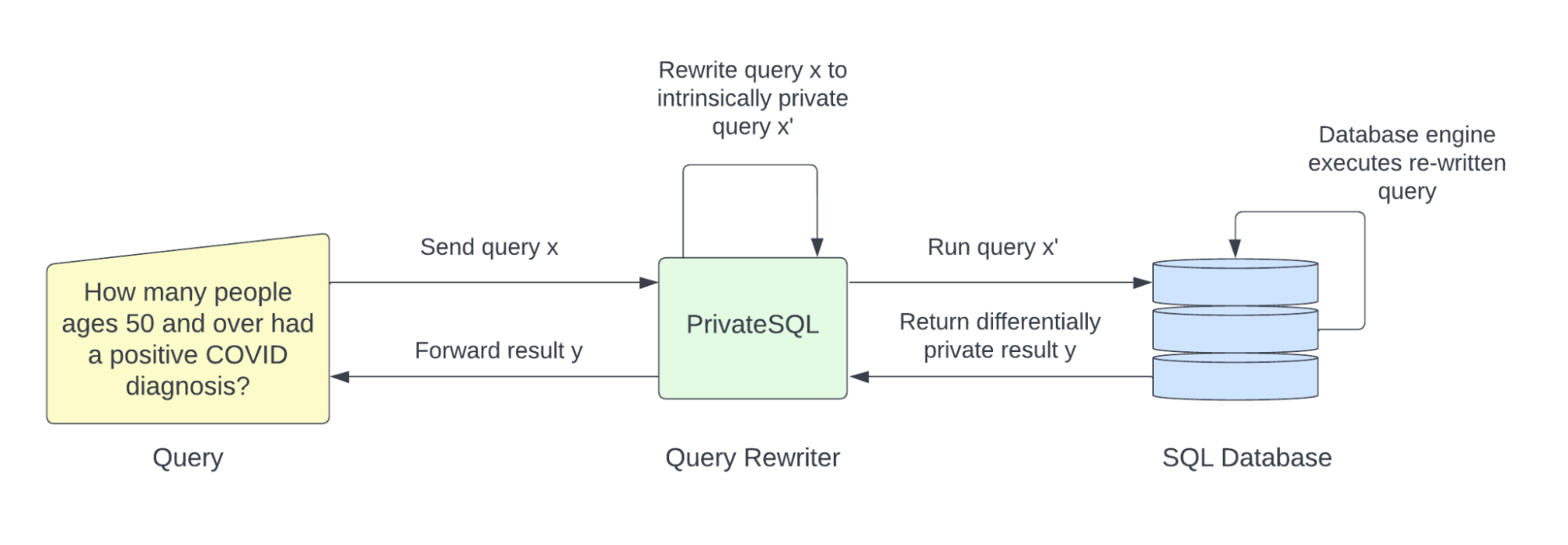

7. PrivateSQL

PrivateSQL is a differential privacy solution that automatically rewrites aggregate queries to be differentially private. Parent company Oasis Labs also offers a privacy budget tracking tool and, in partnership with Meta, released a bias and fairness detection system that employs technologies like differential privacy, multi-party computation cryptography, and homomorphic encryption.

8. Adversarial Robustness Toolbox

Adversarial Robustness Toolbox (ART) is a Python library of adversarial training tools to teach LLMs and other AI applications how to spot evasion, poisoning, extraction, and inference attacks. It also offers defense modules to harden LLM applications and supports multiple estimates, robustness metrics, and certifications. Notably, ART is used by or integrates with many other adversarial training tools, including Microsoft Counterfit.

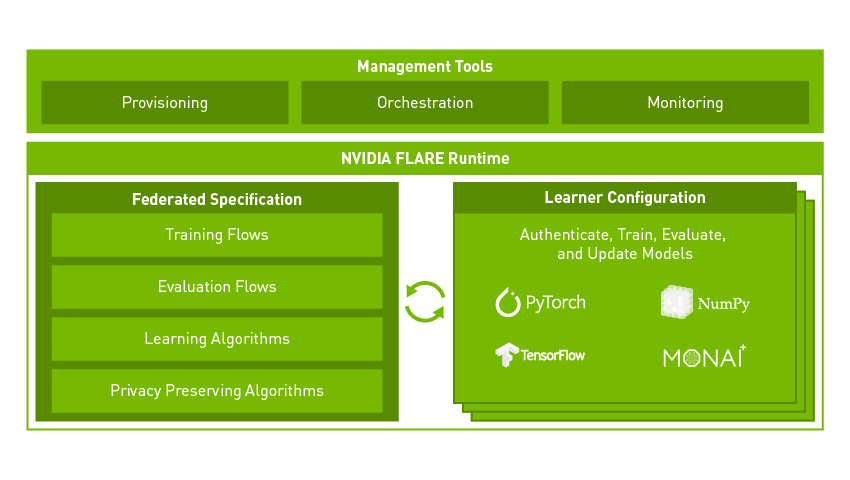

9. NVIDIA FLARE

FLARE (Federated Learning Application Runtime Environment) is an open-source, domain-agnostic federated learning SDK (software development kit) from NVIDIA. It helps companies adapt existing AI/ML architectures from PyTorch, RAPIDS, TensorFlow, and more to a federated paradigm.

10. Flower

Flower is a “friendly” framework for building federated learning systems. It’s a framework-agnostic solution that works within developers’ existing AI/ML libraries and can be extended and adapted to any use case. Flower is easy to use and can be implemented at any stage of LLM research, development, and fine-tuning.

11. Microsoft Counterfit

Microsoft Counterfit is a CLI (command-line interface) tool used to orchestrate adversarial training attacks against AI models. It does not generate the attacks themselves, but instead adds an automation layer that interfaces with adversarial training frameworks like ART to streamline the process.

How to choose the right LLM security tool for your business

Before shopping for solutions, it’s important to clearly define the capabilities you need depending on your specific use case, environment, and risk factors. For example, Flower and NVIDIA FLARE are only meant to be used in the initial stages of LLM development and won’t do anything to help a company that bought a third-party LLM chatbot application. Grancia Screen, on the other hand, protects sensitive data at any stage of LLM training, development, and usage.

Another important consideration is the difference between open source tools and enterprise LLM security solutions. Open source offerings like Garak and ART are supported by a large developer community, whereas solutions like Granica and FLARE come with enterprise-grade support contracts, so there’s someone you can call if something goes wrong and you don’t have the know-how to fix it yourself.

Ultimately, you’ll need more than one of these tools to adequately protect your LLM application, so it’s crucial that all your chosen solutions work together without leaving any vulnerabilities exposed.

Unlocking better, safer AI with Granica Screen

The Granica Screen data minimization tool for LLMs detects and masks sensitive information in training data sets, prompts, and RAG. Its novel detection algorithm is highly compute-efficient, allowing companies to protect more data for less money and eliminating the trade-off between safety and effectiveness.

To learn more about using Granica Screen and other LLM security tools to protect your AI applications, contact our experts to schedule a demo.