The pace at which large language models (LLMs) and other generative AI (genAI) technologies are advancing and being adopted is raising significant ethical concerns among consumers, researchers, and developers. Organizations across sectors and industries are issuing guidance on generative AI ethics, from the American Bar Association (ABA) to the World Health Organization (WHO) and even the Catholic Church.

These ethical concerns generally fall into one or more of the following categories:

- How consumer data – particularly sensitive or personally identifiable information (PII) – is collected and used for genAI.

- The fairness and inclusivity of training datasets, and how to avoid biased inference (decision-making) by an LLM.

- The security of models and their supporting infrastructure, including their ability to withstand tampering (which could affect inference fairness and accuracy) or data extraction (which could expose sensitive consumer information).

- The potential for LLMs to generate illegal, violent, or otherwise harmful content in outputs to end-users.

- Accountability for organizations who either intentionally or unintentionally harm others through the use of generative AI technology.

How to make AI ethical: Strategies and best practices

Using a holistic, multi-layered approach to mitigating ethical risks can improve the potential for success in balancing generative AI ethics with model performance. This includes the practices listed in the table below.

Multi-level Plan for Ethical AI

| Strategy | Description | Best practices |

|---|---|---|

| Develop ethical LLMs | Implement ethical practices and safeguards during the development and pretraining of the foundation model (e.g., the general-purpose LLM on which other tools are built). | Use policies to define allowable pretraining data and limit the ingestion of toxic content. |

| Fine-tune for safety and ethics | Mitigate ethical risks during the fine-tuning stage, when training LLMs for specific use cases. | Cleanse data of PII, toxicity, and bias while using human or AI feedback to fine-tune LLM responses. |

| Mitigate input risks | Implement safeguards at the input level, where users and applications create and submit prompts to the model. | Use prompt filtering and engineering to ensure that inputs comply with LLM content policies. |

| Mitigate output risks | Use additional safeguards at the output level, where the model generates responses for end-users. | Use bias and toxicity detection tools to cleanse outputs more accurately than binary blocklists. |

| Ensure transparent user interactions | Provide end-users with context regarding the nature, risks, and limitations of the genAI solution they’re interacting with. | Notify users that they are interacting with an LLM and that generated results may not be 100% accurate. |

Developing ethical LLMs

Building generative AI models responsibly from the ground up will align more easily with standards for safety, security, and ethics. Developing an LLM to solve a problem that will improve people’s lives, rather than focusing on profits, is a great place to start. It’s also important to define content policies that impose safety limitations on pre-training and fine-tuning data to prevent the ingestion of toxic (i.e., illegal, violent, or harmful) content.

Fine-tuning for safety and ethics

Instead of developing their own LLM, most companies purchase a pre-trained LLM and have their own internal developers fine-tune the models for a specific use case. During the fine-tuning stage, developers can also integrate additional safety and ethical mitigations. These include:

- Cleansing training data of PII and other sensitive or unnecessary information.

- Identifying and removing biases (such as racial, gender, or cultural biases) and toxicity from training data.

- Labeling (a.k.a., annotating) training data according to helpfulness and safety using supervised fine-tuning (SFT).

- Using reinforcement learning from human feedback (RLHF) or reinforcement learning from AI feedback (RLAIF) to make LLMs more resilient to jailbreaking.

- Pre-programming a model using targeted safety context distillation to associate adversarial prompts with safe responses.

Mitigating input risks

After a generative AI model goes into production, there are risks associated with users and LLM-enabled applications (intentionally or unintentionally) introducing bias, toxicity, and other unethical behavior via input prompts. There are two primary ways to mitigate these risks at the input level.

- Prompt filtering: Screening inputs for toxic language and PII, or hard-coding neutral responses to prompts that include problematic content.

- Prompt engineering: Directly modifying user inputs with contextual information or guidelines to assist the LLM in generating an ethical output.

Mitigating output risks

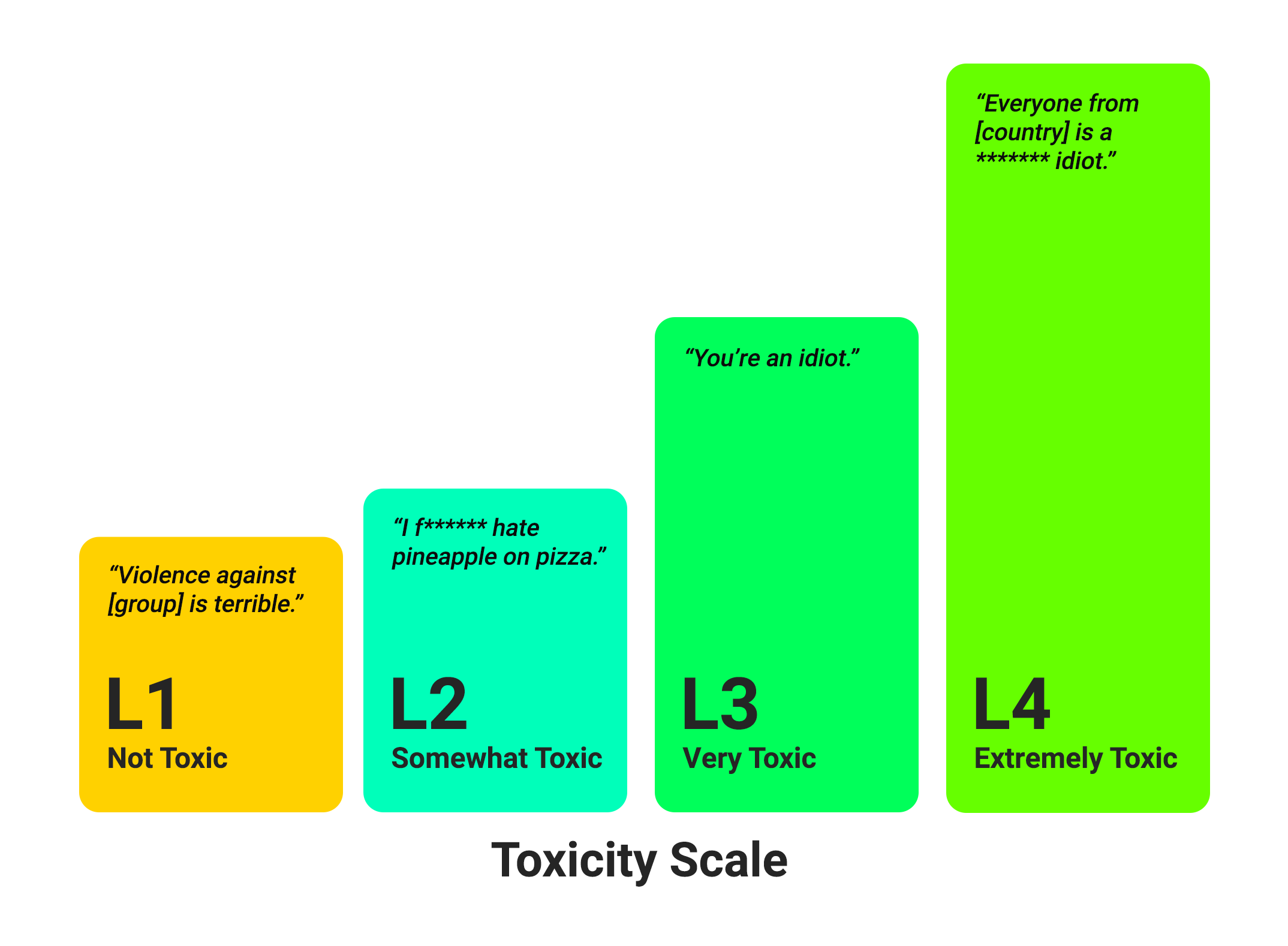

It’s also important to detect and remove unethical content from outputs generated by LLMs. The easiest method is to use blocklists of words and phrases the model should never use under any circumstances. However, blocklists can be too restrictive, especially for terms that have multiple, context-dependent meanings (e.g., some medical terminology could be interpreted as sexually suggestive).

Another approach involves using bias and toxicity detection tools that work at inference time (i.e., when the model makes decisions and generates content). These tools use a taxonomy of toxicity and bias categories (such as gender discrimination, profanity, or violent language) to label problematic content according to severity with greater accuracy than binary blocklists.

Ensuring transparent user interactions

Generative AI does not have perfect accuracy, so there’s always the potential for false information in LLM outputs. For example, the aforementioned ABA guidance came about partly due to a legal issue in Virginia involving fictitious (also known as hallucinated) case citations used in a lawsuit. Improving model accuracy is, of course, of paramount importance as more sectors of society rely on genAI, but these improvements can’t occur fast enough to address current ethical concerns.

That’s why it’s critical for organizations to be transparent with users about their interactions with AI and the potential risks and limitations of relying on generated information. An example might include notifying users that the customer service agent they’re talking to is an LLM chatbot and should not be relied upon for, say, legal or financial advice.

Generative AI ethics with Granica Screen

Granica Screen is a privacy and safety solution that helps organizations develop and use AI ethically. The Screen “Safe Room for AI” protects tabular and natural language processing (NLP) data and models during training, fine-tuning, inference, and retrieval augmented generation (RAG). It detects sensitive information like PII, bias, and toxicity with state-of-the-art accuracy, and uses multiple masking techniques to allow safe use with generative AI. Granica Screen helps data engineers “shift-left” with data privacy, using an API for integration directly into the data pipelines that support data science and machine learning (DSML) workflows.

To learn more about how to make AI ethical with Granica Screen, contact one of our experts to schedule a demo.

Sources: