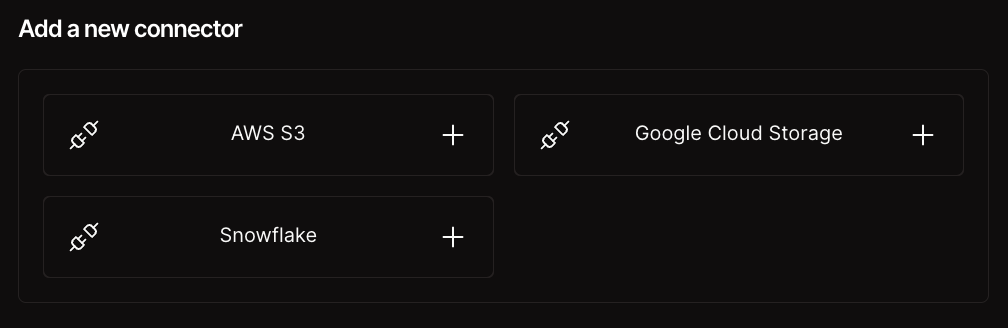

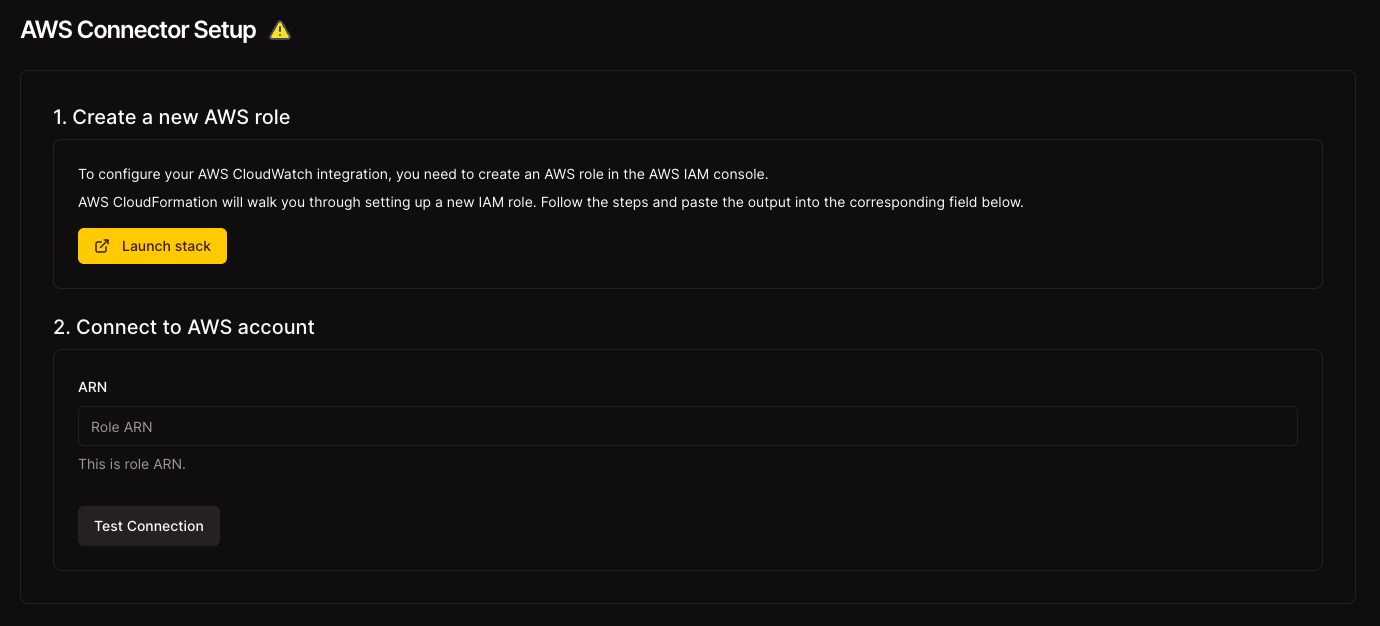

Granica Chronicle AI is a training data visibility service for exploring and cost-optimizing Amazon and Google data lakes, freeing budget that can be reallocated to strategic AI areas such as acquiring and using more training data.

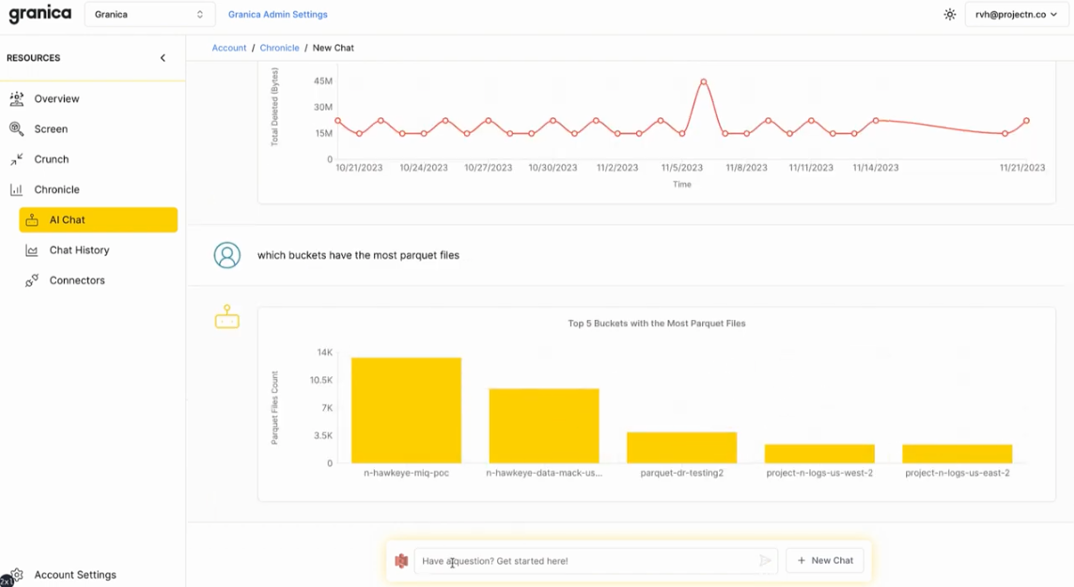

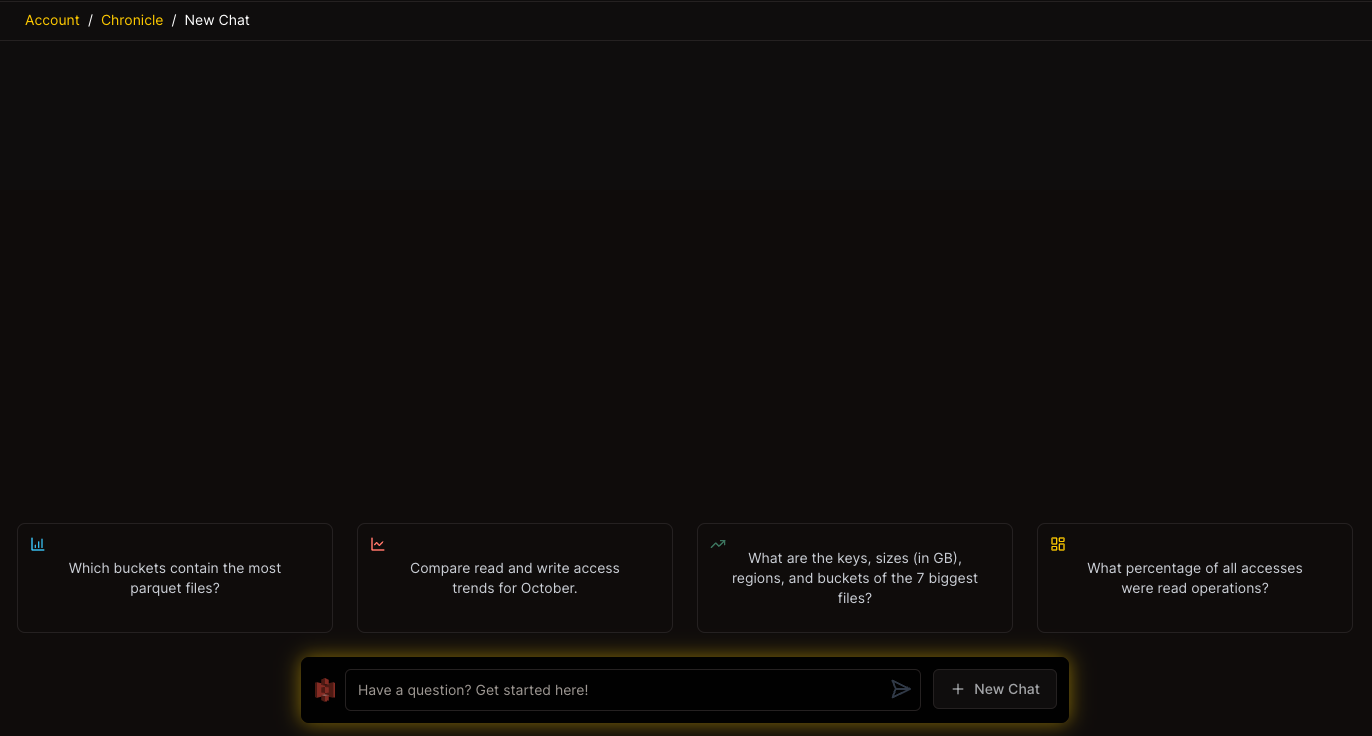

Explore your data environment with genAI-powered prompts for fast, actionable insights - no SQL required.

Identify top opportunities for compression-related savings with Granica Crunch, find and remediate costly and inefficient data lifecycle policies, and more.

Use the freed-up funds to improve model performance by adding and using more training data, increasing compute, or other means.

Explore your data environment with genAI-powered prompts that generate relevant visualizations in graphs and tables to uncover actionable insights, fast.

Use natural language to ask questions of your data lake buckets and files and get answers.

Discover valuable training data, understand usage and access patterns for AI applications, and more.

Get relevant visualizations in graphs and tables - no SQL, CLI, or dashboard creation required.

Data types supported

Granica Chronicle AI supports all file types in your data lake.

Text/NLP

Clickstream/Logs

Tabular

LiDAR/Image

Maximize compression-related savings with Granica Crunch by prioritizing data lake buckets and files for crunching based on typical compression rates, historical access patterns, and similar factors.

Reduce data at-rest and access costs by optimizing lifecycle tiering policies and storage classes based on historical access patterns.

Improve application latency and throughput while reducing access costs by identifying and remediating sub-optimal prefix/read approaches.

Reallocate the savings each month to improve model performance by adding more training data or increasing training compute.
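As a rough illustration of what prioritizing buckets by compression-related savings could look like, here is a minimal Python sketch. It is not Granica's method: the `Bucket` fields, the bucket names, the compression ratios, and the storage price are all hypothetical, and the sketch ignores access patterns entirely. It simply estimates monthly savings as the storage no longer needed after compression multiplied by an assumed price per GB.

```python
from dataclasses import dataclass


@dataclass
class Bucket:
    # All fields are hypothetical inputs chosen for illustration.
    name: str
    size_gb: float             # current at-rest size in GB
    compression_ratio: float   # assumed original:compressed ratio, e.g. 4.0 means 4:1
    price_per_gb_month: float  # assumed storage price in dollars per GB per month


def estimated_monthly_savings(bucket: Bucket) -> float:
    """Estimate monthly storage savings if the bucket were compressed."""
    saved_gb = bucket.size_gb * (1.0 - 1.0 / bucket.compression_ratio)
    return saved_gb * bucket.price_per_gb_month


def rank_buckets(buckets):
    """Return buckets ordered from largest to smallest estimated savings."""
    return sorted(buckets, key=estimated_monthly_savings, reverse=True)


# Hypothetical example data.
buckets = [
    Bucket("logs", 1000.0, 4.0, 0.02),
    Bucket("images", 3000.0, 1.25, 0.02),
    Bucket("tables", 2000.0, 2.0, 0.02),
]

ranked = rank_buckets(buckets)
for b in ranked:
    print(f"{b.name}: ~${estimated_monthly_savings(b):.2f}/month")
```

In this made-up data set the largest bucket is not the best candidate, because its assumed compression ratio is low. A real prioritization would also weigh access patterns, as the text notes.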

Maximize model performance with Granica Crunch insights. Where can we reallocate savings for AI/ML enhancements?

Discover training data effortlessly with genAI. How can we use it to boost our AI/ML capabilities?

Optimize AI/ML workflows with genAI. What insights can help us improve model training and overall performance?

Tap into genAI for data insights. What patterns or trends can we uncover to enhance analytics efficiency?

Optimize data access for analytics. How can we use genAI to streamline and improve our analytics workflows?

Enhance analytics performance with Granica Crunch. Where do we see potential for optimization in our data landscape?

Optimize data lifecycles with Granica Crunch. How can we streamline data engineering workflows for efficiency?

What insights can help us improve the efficiency of our data processing?

Explore data landscapes effortlessly. How can we use genAI to optimize storage and data processing for better performance?

Uncover cost-saving potentials with Granica Crunch. What areas can we optimize to enhance financial efficiency?

Utilize Chronicle AI to visualize spending patterns. How can we strategize budget reallocation for financial optimization?

Explore actionable insights in financial data effortlessly. How can genAI enhance our approach to budget management?

Identify security risks. How can we enhance our data security by addressing potential vulnerabilities?

Explore suboptimal access approaches. How can we bolster our security measures with insights from Granica Crunch?

Utilize Granica Crunch to strengthen security. What steps can we take to mitigate risks and optimize data protection?

Yes, Chronicle is file-type agnostic and can query the metadata associated with all files in your data lake, with a focus on access metadata ingested from your cloud storage/server access logs.

Traditional cloud data lake storage visibility tools focus on high-level metrics such as data volumes, object counts and sizes, and operation counts (e.g. GET/PUT). Chronicle AI instead provides rich insight into access patterns and file types, showing how specific users and applications (i.e. IAM roles) actually use the data. It delivers this visibility through a user-friendly genAI-powered prompt interface, so anyone can explore without creating custom SQL, pipelines, or dashboards.
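To make the difference between operation counts and per-role access patterns concrete, here is a minimal Python sketch of the kind of aggregation involved. It does not show Chronicle AI's implementation or any real log format: the record fields (`role`, `operation`, `key`), the role names, and the object keys are hypothetical stand-ins for parsed access-log entries.

```python
from collections import Counter

# Hypothetical, already-parsed access-log records (not a real log schema).
records = [
    {"role": "training-pipeline", "operation": "GET", "key": "datasets/a.parquet"},
    {"role": "training-pipeline", "operation": "GET", "key": "datasets/b.parquet"},
    {"role": "etl-job", "operation": "PUT", "key": "raw/events.json"},
    {"role": "etl-job", "operation": "GET", "key": "raw/events.json"},
    {"role": "analyst", "operation": "GET", "key": "reports/summary.csv"},
]


def access_counts_by_role(records):
    """Count operations per (role, operation) pair."""
    counts = Counter()
    for record in records:
        counts[(record["role"], record["operation"])] += 1
    return counts


def file_types_by_role(records):
    """Count accessed file extensions per role."""
    by_role = {}
    for record in records:
        key = record["key"]
        extension = key.rsplit(".", 1)[-1] if "." in key else ""
        by_role.setdefault(record["role"], Counter())[extension] += 1
    return by_role


counts = access_counts_by_role(records)
types = file_types_by_role(records)
```

A plain operation count would only report four GETs and one PUT. Grouping by role shows which application is responsible for which reads and writes, and which file types it touches.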

Build better AI through (much) better visibility into how your training data is being used and how to optimize it for both cost and performance.