Granica Crunch is the world’s first cloud cost management solution purpose-built to help data engineers lower the cost of their lakehouse data. It goes beyond FinOps observability to automatically remediate data-related inefficiency, lowering cloud storage costs by up to 60% and speeding queries by up to 56%.

It’s hard to control cloud costs for ever-growing data while experimenting with AI. Even with low-cost cloud object storage backing your data lakehouse, a single PB of Parquet data has an at-rest cost of nearly $300k per year.

Tiering into colder, less expensive cloud storage classes doesn't work for analytical and training data because that data is regularly accessed. Manually optimizing the compression and encoding of Parquet to lower cloud costs is complex and risky.

What data teams need is a way to automatically optimize compression of Parquet to lower cloud costs and speed access, analogous to how query optimizers increase query performance. We created Granica Crunch to solve this problem.

60%

Crunch shrinks the physical size of the columnar files in your cloud data lakehouse, lowering at-rest storage and data transfer costs by up to 60% for large-scale analytical, AI and ML data sets.

60%

Smaller files also make cloud data transfers and replication up to 60% faster, addressing AI-related compute scarcity, compliance, disaster recovery and other use cases demanding bulk data transfers.

56%

Smaller files are also faster files: they accelerate any data pipeline, query or process bottlenecked by network or IO bandwidth, by up to 56% based on TPC-DS benchmarks.

1 Petabyte Data Lakehouse (Parquet)

| | Year 1 | Year 2 | Year 3 | Total |
| --- | --- | --- | --- | --- |
| Data lakehouse storage costs before Granica | $0.26M | $0.34M | $0.45M | $1.05M |
| Data lakehouse storage costs after Granica | $0.15M | $0.20M | $0.26M | $0.60M |
| Gross savings generated by Granica Crunch | $0.11M | $0.15M | $0.19M | $0.45M |

* Assumes 30% annual data growth and a 43% compression rate on top of Snappy-compressed Parquet

25 Petabyte Data Lakehouse (Parquet)

| | Year 1 | Year 2 | Year 3 | Total |
| --- | --- | --- | --- | --- |
| Data lakehouse storage costs before Granica | $6.6M | $8.6M | $11.2M | $26M |
| Data lakehouse storage costs after Granica | $3.8M | $4.9M | $6.4M | $15M |
| Gross savings generated by Granica Crunch | $2.8M | $3.7M | $4.8M | $11M |
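The figures in the tables above can be approximated with a few lines of arithmetic. The sketch below is illustrative only and is not Granica's calculator: it uses the stated assumptions of 30% annual data growth and a 43% size reduction, plus a unit storage cost of $0.264M per PB per year, which is an assumption inferred from the 25 PB table ($6.6M / 25 PB in year 1). The function name `project_savings` is hypothetical. Results may differ from the published tables by a rounding step.

```python
# Assumptions from the tables' footnote: 30% annual data growth and a
# 43% size reduction on top of Snappy-compressed Parquet.
GROWTH = 0.30
REDUCTION = 0.43
# Assumed unit cost in $M per PB per year, inferred from the 25 PB table
# ($6.6M / 25 PB in year 1). This is not a published price.
COST_PER_PB_YEAR_MUSD = 0.264


def project_savings(petabytes, years=3):
    """Return a list of per-year (before, after, savings) costs in $M."""
    rows = []
    for year in range(years):
        # Storage cost grows with the data: year 1 has no growth applied yet.
        before = petabytes * COST_PER_PB_YEAR_MUSD * (1 + GROWTH) ** year
        # A smaller physical footprint lowers the at-rest cost proportionally.
        after = before * (1 - REDUCTION)
        rows.append((before, after, before - after))
    return rows


if __name__ == "__main__":
    for size in (1, 25):
        rows = project_savings(size)
        print(f"{size} PB lakehouse")
        for i, (before, after, saved) in enumerate(rows, start=1):
            print(f"  Year {i}: before ${before:.2f}M, "
                  f"after ${after:.2f}M, saved ${saved:.2f}M")
        print(f"  Total saved: ${sum(r[2] for r in rows):.2f}M")
```

Changing `GROWTH`, `REDUCTION`, or the unit cost shows how sensitive the estimate is to each input; the actual reduction depends on your data.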

Slash the cloud storage costs for your Parquet data lakehouse files. Use our simple 2-input calculator to get a preliminary estimate.

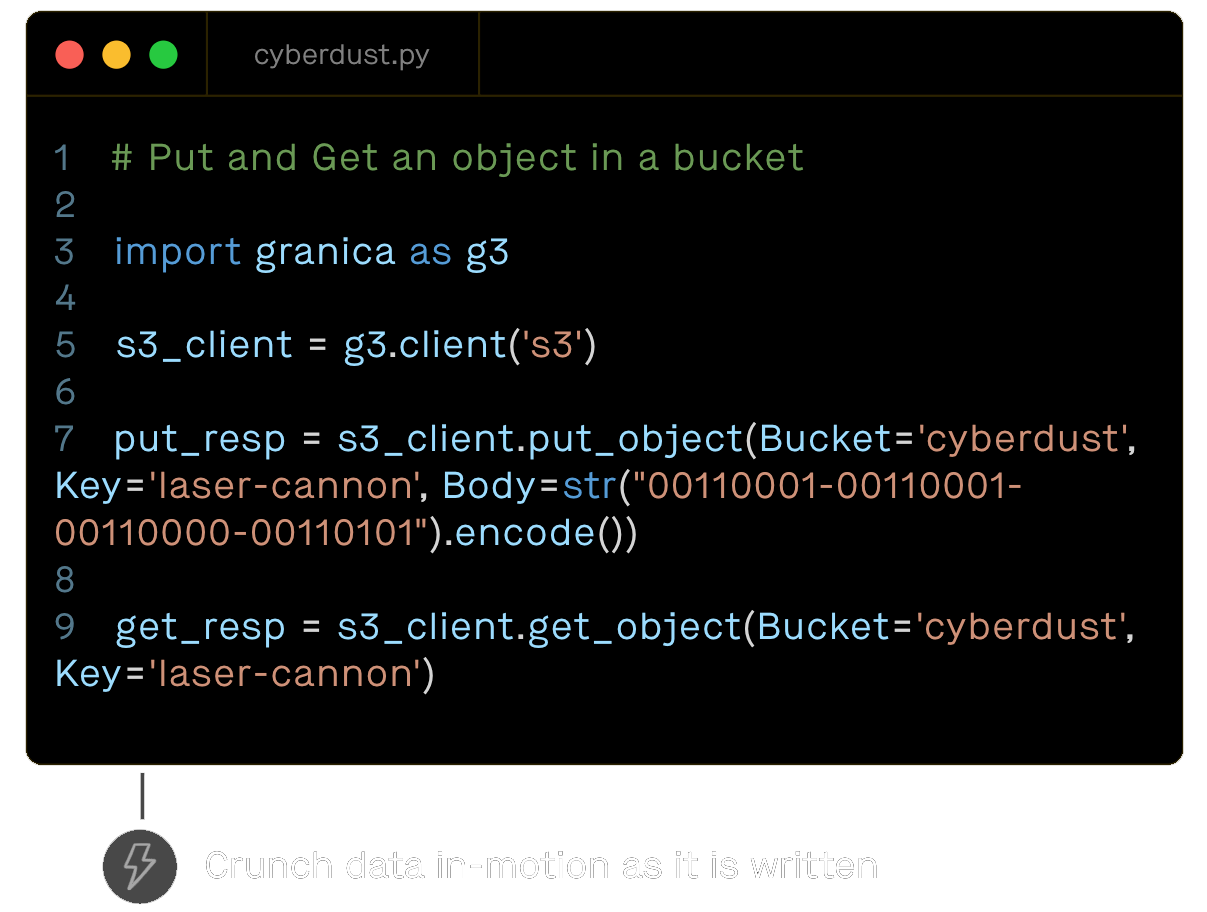

Granica Crunch isn't your typical cloud cost management solution. It moves beyond FinOps observability to automatically mitigate data-related inefficiency — data engineers simply set it and forget it. Crunch continuously optimizes the compression of columnar data lake formats such as Apache Parquet to control cloud costs, speed processing, and improve developer/data engineer productivity and efficiency.

Read our latest white paper, "Building Trust, Impact and Efficiency into Traditional and Generative AI".

Granica’s cloud cost management platform helps reduce your unit economic cost to store lakehouse data. Capture recurring savings relative to your pre-Granica baseline each and every month, not just once. Ten petabytes of existing columnar data typically translates into an annual gross savings of ~$1.2M, scaling as your data lakehouse grows. Crunch helps you meet your most crucial cloud cost optimization KPIs for both cost and performance.

What’s truly meant by the term AI-ready data, and what are the best approaches to successfully deliver AI-ready data aligned to AI use-cases? Download Gartner's latest research report to learn how AI efforts are evolving the data management requirements for organizations, compliments of Granica.

g ~/ granica deploy

Success!

g ~/

Granica Crunch typically reduces columnar data-related cloud storage costs by 15-60%, depending on your data. In TPC-DS benchmark tests using Snappy-compressed Parquet as the input data, Crunch delivered an average 43% file-size reduction. The reduction rate directly translates into gross savings on an annual basis. If you’re storing 10 petabytes of AI data in Amazon S3 or Google Cloud Storage, that translates into more than $1.2M per year (growing as your data grows).

Granica Crunch uses a proprietary compression control system that leverages the columnar nature of modern analytics formats like Parquet and ORC to achieve high compression ratios through underlying OSS compression algorithms such as zstd. The compression control system combines techniques like run-length encoding, dictionary encoding, and delta encoding to optimally compress data on a per-column basis.
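To make the three encoding techniques named above concrete, here is a minimal, generic sketch of each applied to a toy column. It is a textbook illustration only, not Granica's proprietary compression control system and not the Parquet on-disk format; the function names and sample data are made up for this example.

```python
from itertools import groupby


def run_length_encode(values):
    """Collapse runs of repeated values into [value, run_length] pairs."""
    return [[value, len(list(run))] for value, run in groupby(values)]


def dictionary_encode(values):
    """Replace each value with a small integer index into a dictionary."""
    dictionary = []   # distinct values in first-seen order
    index_of = {}     # value -> position in `dictionary`
    codes = []
    for value in values:
        if value not in index_of:
            index_of[value] = len(dictionary)
            dictionary.append(value)
        codes.append(index_of[value])
    return dictionary, codes


def delta_encode(values):
    """Store the first value, then the difference between neighbours."""
    if not values:
        return []
    return [values[0]] + [b - a for a, b in zip(values, values[1:])]


# Toy columns (made-up data): each suits a different encoding.
status = ["ok", "ok", "ok", "error", "ok", "ok"]   # long runs
country = ["US", "DE", "US", "US", "FR", "DE"]     # few distinct values
timestamps = [1000, 1005, 1010, 1015, 1021]        # slowly increasing

print(run_length_encode(status))     # [['ok', 3], ['error', 1], ['ok', 2]]
print(dictionary_encode(country))    # (['US', 'DE', 'FR'], [0, 1, 0, 0, 2, 1])
print(delta_encode(timestamps))      # [1000, 5, 5, 5, 6]
```

Because each column has its own value distribution, the best encoding differs per column, which is why columnar formats allow the choice to be made column by column before a general-purpose compressor such as zstd is applied.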

Granica Crunch is NOT in the read or write path. It works in the background to adaptively optimize the OSS compression and encoding of your columnar lakehouse files stored in Amazon S3 and/or Google Cloud Storage. Apache Spark and Trino-based applications continue accessing the newly-optimized files normally. Query performance actually improves as there are fewer physical bits to read and transfer from object storage to the query engine. TPC-DS benchmarks show an improvement of 2-56% depending on the query.

Yes, your production applications continue to read and write data as they normally would. Granica Crunch is fully compatible with Apache Spark and Trino query engines utilizing Apache Parquet, including any BI tools querying those engines. Granica-optimized tables can be queried using standard SQL without any modification.

We plan to extend Granica Crunch with support for other major query engines such as Presto, Hive, BigQuery, EMR, and Databricks, support for other columnar formats such as ORC, and integration with popular data catalogs and schema registries.

Granica Crunch is licensed and priced based on the volume of compressed data stored per month, measured using uncompressed source bytes. Granica charges a minimum monthly commitment which allows you to crunch up to 1PB of source data. Thereafter, each incremental terabyte of source data crunched is billed at a $ per TB rate. Please contact us for a custom quote based on your environment.

Check out our detailed Crunch FAQ and documentation.

Harness the inefficiency in petabyte-scale lakehouse data to improve the ROI on AI. Granica’s cloud cost management platform optimizes cloud storage spend while boosting pipeline performance and developer/OPEX efficiency.