The RSA Conference is the largest cybersecurity conference of the year, with an estimated 45,000 practitioners, researchers, business leaders, and government officials in attendance. This year's conference did not disappoint. We were impressed with the rate of progress across the board, from network security and endpoint protection to application security and autonomous penetration testing. In case you did not have the chance to attend, here is a quick summary of our biggest takeaways from this year's conference.

AI In Cybersecurity

Unsurprisingly, AI dominated conversations in the Expo Hall and the topics of the hosted Conference Sessions. After all, the fundamental purpose of cybersecurity is to protect systems, networks, devices, and data from threats, and whenever a new technological paradigm emerges, the attack surface only expands.

In the context of cybersecurity, AI has several different associations, business applications, and implications. We find the framework below useful for thinking through them.

| Business Application / Implication | Description |
|---|---|
| The Security Of AI And Its Applications | Enhancing the security posture of critical AI systems, including training data, APIs and enterprise applications, and the models and LLMs themselves. |
| AI-Powered Cybersecurity Solutions | Using advanced AI to enhance and automate security technologies such as detection, response, anomaly monitoring, penetration testing, and more. |
| Protecting Against AI-Driven Cybersecurity Attacks | Solutions to prevent and defend against AI-driven phishing, deepfakes, malware generation and evasion, automated vulnerability discovery, credential stuffing, and distributed denial of service attacks. |

Cybersecurity vendors and their solutions generally cover one or more of the above business applications and implications. At Granica, for instance, our privacy engineering efforts are predominantly focused on the security of AI and its applications, but our technologies also leverage increasingly sophisticated AI-powered techniques.

So What's The Big Deal?

Enterprises, governmental and regulatory agencies, and consumers alike are exploring and utilizing generative AI for various purposes. McKinsey estimates that generative AI alone could add the equivalent of $2.6 trillion to $4.4 trillion annually to the global economy. Given its economic potential, we expect interest and experimentation to only increase.

Full realization of this technology's benefits, however, will take significant time, and there are considerable cybersecurity challenges to address, including data privacy and model security.

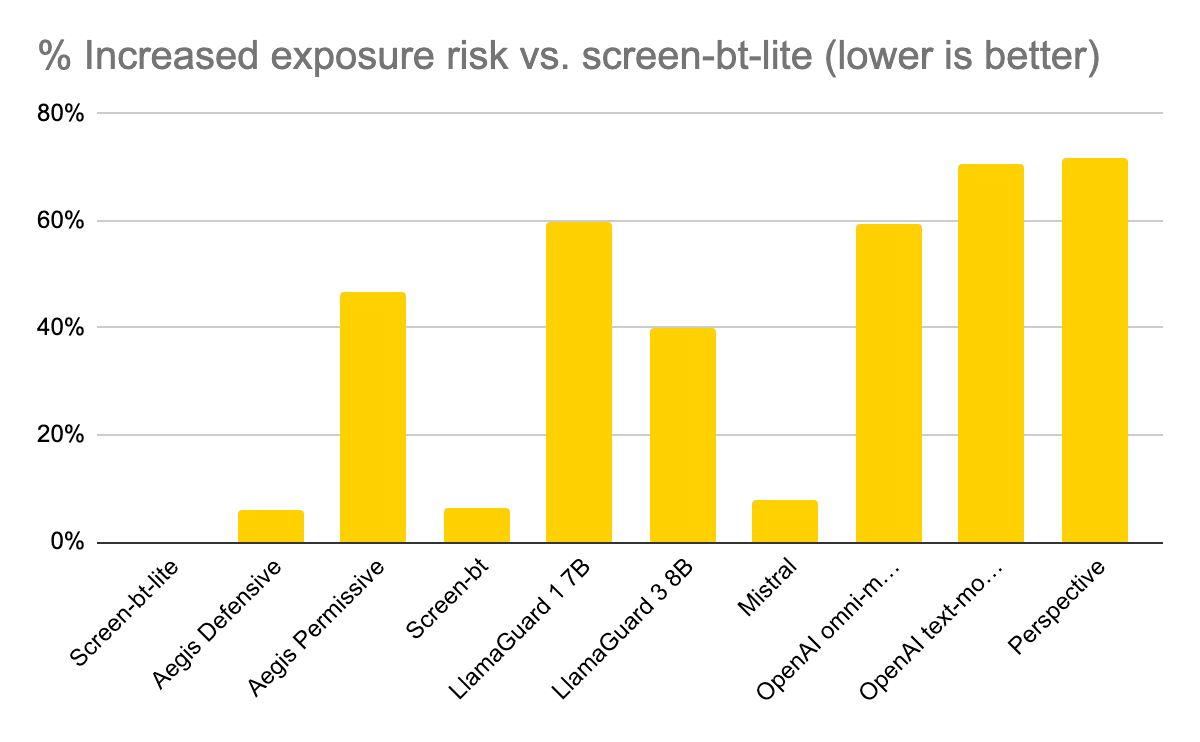

Source: Security For AI, Menlo Ventures

Our Top Takeaways

We've established frameworks for thinking about AI in cybersecurity, AI's potential, and what we (may) stand to lose. Now, let's dive into the most relevant takeaways derived from the conference.

- The Need For Comprehensive AI Data Security: Given the unprecedented growth of structured and unstructured data, CISOs must understand the full inventory of sensitive data their organization may possess, including the relative sensitivity of these data assets and how this data is being accessed and utilized. Once visibility into an organization's data landscape is established, implementing effective access governance and cataloging capabilities becomes table stakes.

- Role-Based Access Control Is More Important Than Ever: AI systems, including the underlying training datasets and the models themselves, deal with sensitive data. Organizations must monitor who has access to these systems, prevent unauthorized access, and ensure they are following regulatory compliance controls. Security leaders want to understand the totality of AI systems being used across their organization and who should have access to them. Role-based access control streamlines access management by grouping users into roles based on job functions or responsibilities, simplifying the process of granting or revoking access rights.

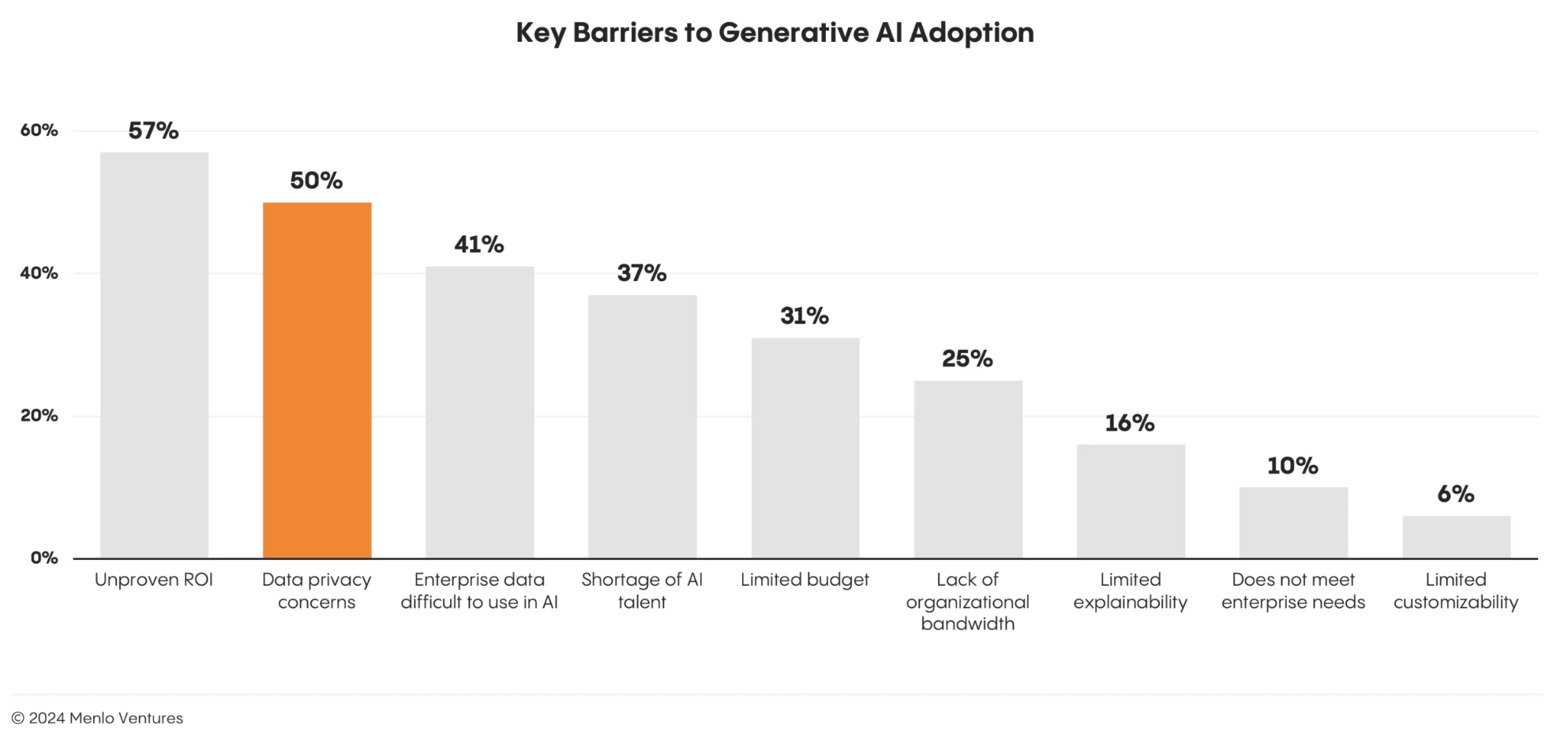

- Privacy And Runtime Security Are The Biggest Risks For LLMs: Business leaders are reluctant to adopt generative AI due to ongoing concerns about data privacy, control, and runtime risks. LLMs are trained on large datasets and risk inadvertently memorizing sensitive information present in the training data. Additionally, prompt injections, lack of response evaluation, and data poisoning warrant upgrades to existing API security infrastructure. As a result, there is resurgent interest in runtime protection and in detection and response capabilities that provide visibility into the behavior of large language models and monitor for anomalies.

- CISOs Need Help, Big Time: In the era of AI, the role of the CISO is evolving and becoming more challenging. Key constraints include a shortage of cyber talent, struggling legacy systems and technical debt, and strict operating budgets. CISOs must therefore optimize for a given risk tolerance threshold under these constraints. Opportunities exist for vendors to make the lives of CISOs easier, both with AI-driven automation that increases the efficacy of existing systems and addresses headcount shortages, and with comprehensive platforms that allow CISOs to retire legacy systems and reduce the number of tools they must manage.

- Platformization And Consolidation Of Vendors: Cybersecurity vendors may initially target a somewhat narrow use case, such as AI data security posture management, but will gradually look to expand the breadth and depth of their use cases toward enabling or directly addressing areas such as access governance, detection and response, and real-time monitoring. The strategic decision lies in whether to build these capabilities natively or to create the requisite integrations and connectors with surrounding systems in the broader IT stack. Larger, more established vendors will look to M&A opportunities to execute on these strategies and capitalize on special situations (e.g., Wiz and Lacework).
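To make the role-based access control takeaway above more concrete, here is a minimal Python sketch of the idea: permissions attach to roles, users are assigned roles, and granting or revoking access becomes a single role change. The role names, permission strings, and helper functions are all hypothetical and chosen purely for illustration; they do not describe any particular vendor's product or our own implementation.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical mapping from role to permissions on AI systems (illustrative only).
ROLE_PERMISSIONS = {
    "ml_engineer": {"training_data:read", "model:deploy"},
    "data_scientist": {"training_data:read", "model:query"},
    "analyst": {"model:query"},
}


@dataclass
class User:
    name: str
    roles: set[str] = field(default_factory=set)


def grant_role(user: User, role: str) -> None:
    """Assign a known role to a user; unknown roles are rejected."""
    if role not in ROLE_PERMISSIONS:
        raise ValueError(f"unknown role: {role}")
    user.roles.add(role)


def revoke_role(user: User, role: str) -> None:
    """Remove a role from a user; a no-op if the user lacks it."""
    user.roles.discard(role)


def is_allowed(user: User, permission: str) -> bool:
    """A user holds a permission if any of their roles includes it."""
    return any(permission in ROLE_PERMISSIONS.get(r, set()) for r in user.roles)


# Usage: an analyst can query a model but cannot read training data.
alice = User("alice")
grant_role(alice, "analyst")
print(is_allowed(alice, "model:query"))         # True
print(is_allowed(alice, "training_data:read"))  # False
```

Because access decisions depend only on role membership, auditing who can reach a given AI system reduces to listing the roles that carry the relevant permission and the users assigned to them.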

Concluding Thoughts

Overall, it was an extremely informative and productive conference. We walk away feeling inspired yet cognizant of the challenges and risks that exist in AI cybersecurity today. We look forward to continuing to expand our breadth and depth of solutions aimed at securing AI and its applications for our customers. Hope to see you at the conference next year! 👋

Granica team members Mack Yi (Head of Privacy Engineering) and Diego Acosta (Business Operations Lead) attend RSA.