As more organizations integrate AI and machine learning, they need to store greater quantities of data in cloud data lakes. However, this data is often costly to store, process, and transfer. Using less data isn’t an option for cost savings, either, because machine learning relies on extensive libraries of high-quality data. In fact, 70% of data analytics professionals say that data quality is the most important issue organizations face today.

Data cost optimization can help businesses reduce data costs without sacrificing quality. Strategies like cost allocation, tiering, and compression work together to keep cloud data lake storage costs as low as possible. We’ll explore some of these strategies in detail below.

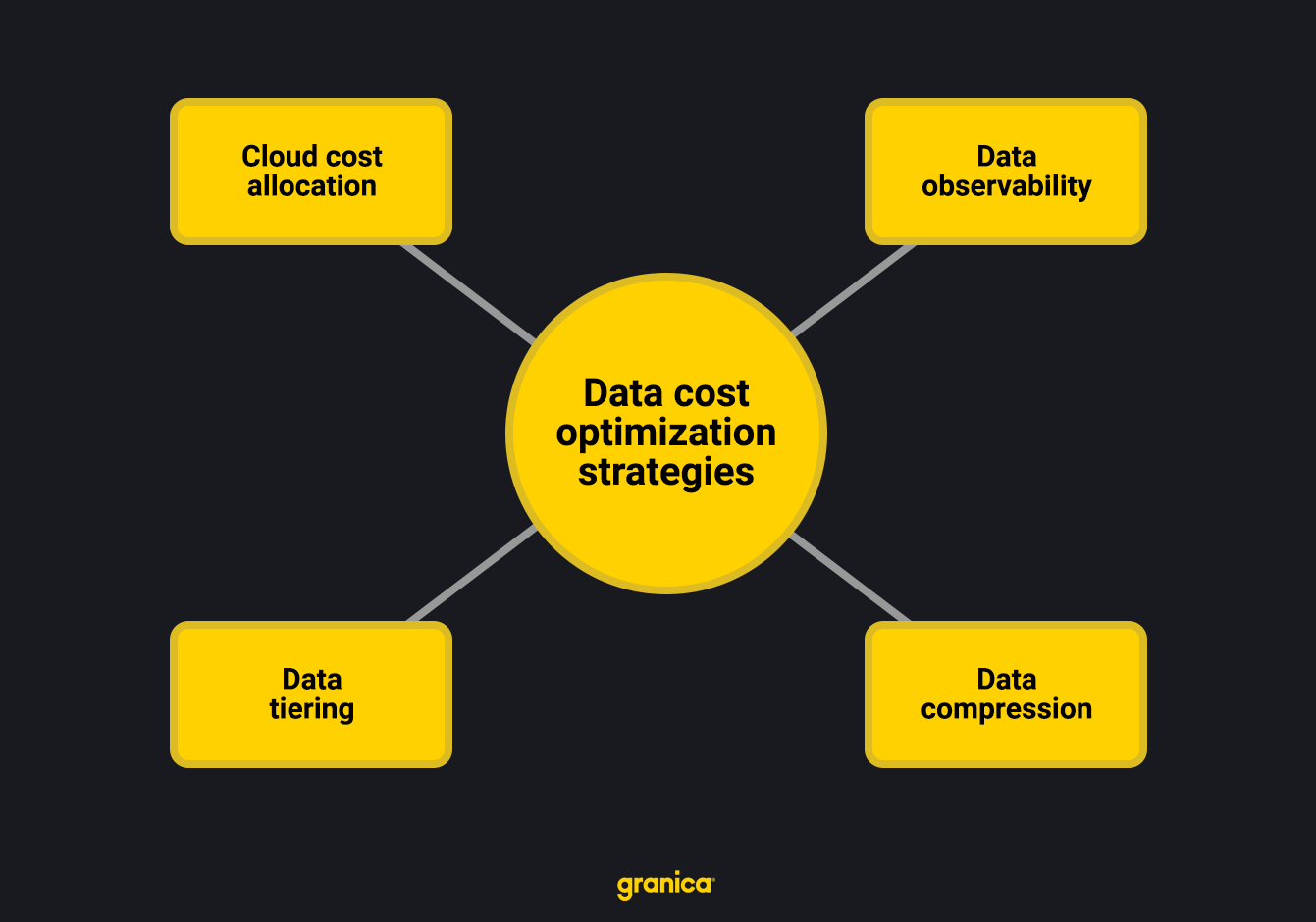

4 Data cost optimization strategies

Data cost optimization is a long-term, continuous process that ideally starts with teams shifting left and designing efficient data architectures from the ground up. Organizations don’t need to scrap their existing architectures and start from scratch to control their data costs, however. The following data cost optimization strategies can help reduce monthly costs regardless of your starting point.

1. Track spending with cloud cost allocation

Cloud cost allocation involves identifying, labeling, and tracking cloud costs by team or department so a company can see where its monthly spend goes. Cost allocation is a cloud cost management best practice that helps demystify cloud bills and allows teams to see how much they spend on cloud data storage and transfer. With this information in hand, it’s easier to determine which optimization techniques will be most effective for reducing costs.
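As a rough illustration of the idea, the sketch below groups billing line items by team and service. The field names (`team`, `service`, `cost_usd`) and the sample figures are hypothetical; real billing exports differ by provider and carry many more fields.

```python
from collections import defaultdict

# Hypothetical billing line items, for illustration only.
line_items = [
    {"service": "storage", "team": "ml-platform", "cost_usd": 1200.0},
    {"service": "transfer", "team": "ml-platform", "cost_usd": 300.0},
    {"service": "storage", "team": "analytics", "cost_usd": 800.0},
    {"service": "storage", "team": None, "cost_usd": 150.0},  # untagged resource
]


def allocate_costs(items):
    """Sum cost per (team, service); untagged spend goes to 'unallocated'."""
    totals = defaultdict(float)
    for item in items:
        team = item["team"] or "unallocated"
        totals[(team, item["service"])] += item["cost_usd"]
    return dict(totals)


report = allocate_costs(line_items)
for (team, service), cost in sorted(report.items()):
    print(f"{team:15s} {service:10s} ${cost:,.2f}")
```

Keeping an explicit "unallocated" bucket makes untagged spend visible, which is often the first thing a team wants to drive toward zero.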

2. Maintain quality with data observability

Data observability is the process of managing data to ensure it’s reliable, available, and high-quality. This prevents poor-quality data from disrupting outcomes. Observability also reduces data costs by ensuring organizations only pay for the most useful data in cloud data lakes. Organizations can delete non-valuable data or move it to archival or other cold storage locations that are less expensive to maintain.

To reduce data costs, focus on these data observability strategies:

- Identify all data and resource utilization.
- Standardize workflows and data management processes.
- Analyze data using data analysis tools to assess quality.
- Detect data anomalies using automated alerts.
- Review data quality on a regular basis (at least once per month).
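One simple way to approach the anomaly detection step is a z-score check on a daily metric. The sketch below is a minimal example under assumptions: the metric (daily row counts), the sample values, and the threshold of 2.0 are all hypothetical, and production observability tools typically use more robust methods.

```python
from statistics import mean, stdev


def find_anomalies(values, threshold=2.0):
    """Return indices of values whose z-score magnitude exceeds the threshold."""
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    if sigma == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical daily row counts for one table; day 5 has a sharp drop.
daily_row_counts = [1000, 1020, 990, 1010, 1005, 40, 1015, 995]
anomalous_days = find_anomalies(daily_row_counts)
print(anomalous_days)
```

In an automated setup, a non-empty result would trigger an alert rather than a print statement.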

3. Prioritize storage costs with data tiering

Data tiering prioritizes data based on utility and frequency of required access. Useful and frequently accessed assets like AI training data are kept in standard-tier cloud storage – the most expensive and easiest to access – while the rest goes into cheaper archival storage.

In practice, however, cloud architects often prefer to keep the vast majority of data in the standard tier because it’s faster, has SLAs for the highest availability, is easily accessible, and incurs fewer data transfer charges. As a result, data tiering on its own usually isn’t enough to make a notable reduction in cloud costs.
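The sketch below shows why the savings can be small when most data stays in the standard tier. It assumes a simple rule (archive anything not accessed in 90 days), and the prices and object sizes are hypothetical placeholders, not quotes from any cloud provider.

```python
from dataclasses import dataclass

# Hypothetical per-GB monthly prices, for illustration only.
PRICE_PER_GB_MONTH = {"standard": 0.023, "archive": 0.004}


@dataclass
class DataObject:
    key: str
    size_gb: float
    days_since_last_access: int


def choose_tier(obj, archive_after_days=90):
    """Assign 'archive' to objects not accessed within the cutoff."""
    if obj.days_since_last_access > archive_after_days:
        return "archive"
    return "standard"


def monthly_cost(objects, tiered):
    """Total monthly storage cost, with or without tiering applied."""
    total = 0.0
    for obj in objects:
        tier = choose_tier(obj) if tiered else "standard"
        total += obj.size_gb * PRICE_PER_GB_MONTH[tier]
    return total


objects = [
    DataObject("training/2024.parquet", 900.0, 3),
    DataObject("features/current.parquet", 80.0, 1),
    DataObject("logs/2021.parquet", 20.0, 400),
]

flat_cost = monthly_cost(objects, tiered=False)
tiered_cost = monthly_cost(objects, tiered=True)
print(f"all standard: ${flat_cost:.2f}, tiered: ${tiered_cost:.2f}")
```

With only 2% of the data eligible for archival in this made-up example, the tiered bill is less than 2% lower than the all-standard bill.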

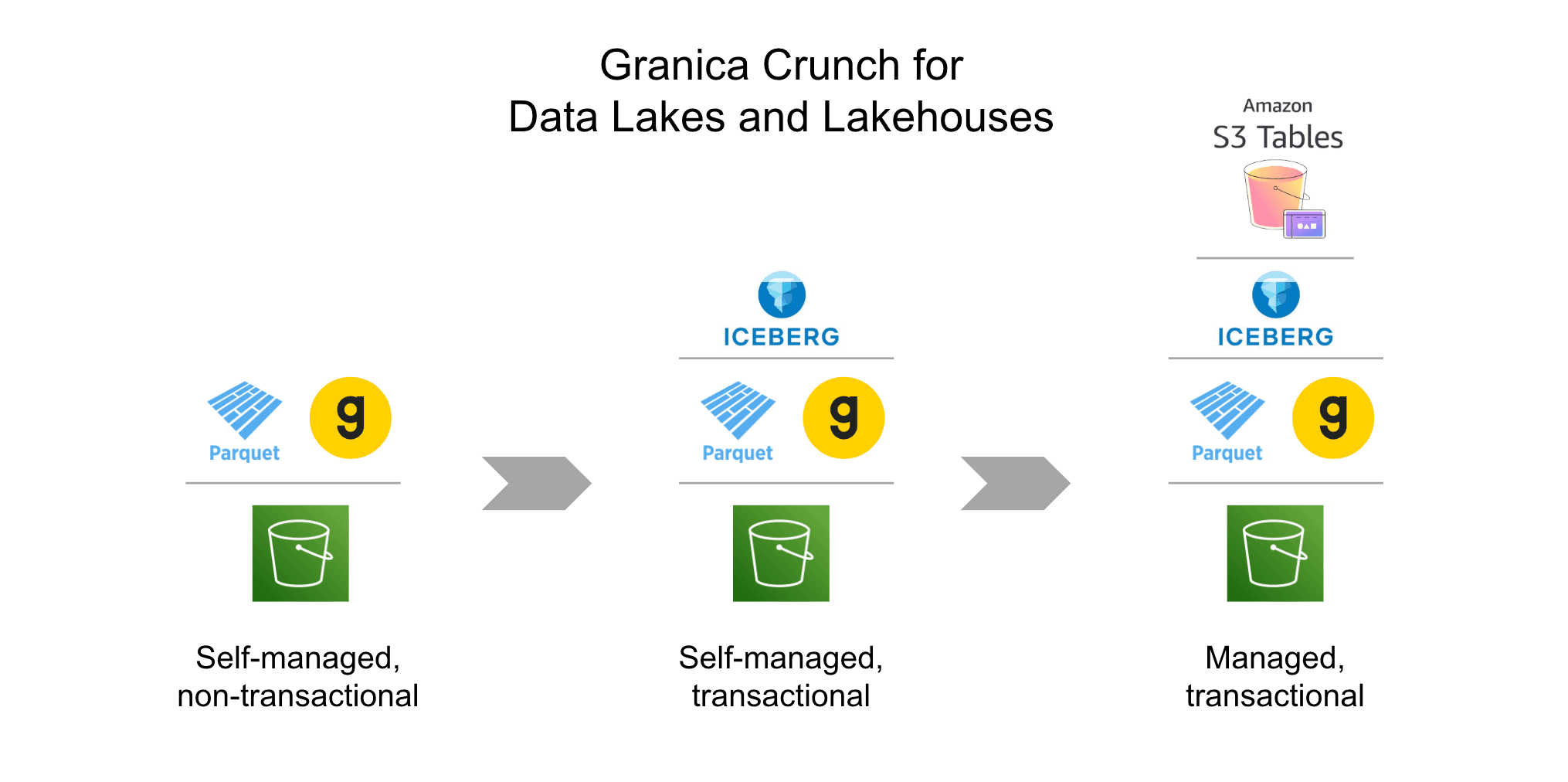

4. Shrink file sizes with data compression

Data compression reduces the size of data files, especially the Apache Parquet files that form the foundation of cloud data lakes and lakehouses, thereby reducing the amount of space they take up as well as the cost to store them. It also decreases the size and bandwidth of data transfers and replication across regions and clouds, and it speeds up applications that are bottlenecked by data lake read throughput. Fewer bits take less time to move, and decompressing data is typically faster than transferring the extra bits, resulting in faster performance overall. There are two primary types of data compression:

- Lossless. This compression method reduces the size of data objects and files without changing the quality of the data. This is the most widely applicable form of data compression because it enables organizations to use the exact same data at a reduced storage cost.
- Lossy. This method works best for image files and LiDAR (Light Detection and Ranging) point cloud data sets. Lossy compression reduces the file size but changes the file in the process. Organizations must employ lossy compression with caution, as it can negatively impact downstream usage. Sophisticated compression tools can perform lossy compression without disrupting downstream usage, particularly for AI and ML.
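The difference between the two types can be sketched with Python's standard library. This is only an illustration: it uses `zlib` on raw bytes as a stand-in, not Parquet encoding or any vendor's algorithm, and the sample readings and the two-decimal precision are hypothetical choices.

```python
import struct
import zlib

# Hypothetical sensor readings stored as 8-byte floats.
readings = [20.0 + (i % 10) * 0.123456 for i in range(1000)]
raw = struct.pack(f"{len(readings)}d", *readings)

# Lossless: the decompressed bytes are identical to the original.
compressed = zlib.compress(raw, 9)
restored = zlib.decompress(compressed)
assert restored == raw

# Lossy: quantize to hundredths and store as 4-byte ints before compressing.
# Precision beyond two decimals is discarded and cannot be recovered.
quantized = [round(r * 100) for r in readings]
lossy_raw = struct.pack(f"{len(quantized)}i", *quantized)
lossy_compressed = zlib.compress(lossy_raw, 9)

unpacked = struct.unpack(f"{len(quantized)}i", zlib.decompress(lossy_compressed))
approx = [q / 100 for q in unpacked]
max_error = max(abs(a - b) for a, b in zip(readings, approx))

print(f"raw: {len(raw)} bytes, lossless: {len(compressed)} bytes")
print(f"lossy: {len(lossy_compressed)} bytes, max error: {max_error:.4f}")
```

Whether an error of this size is acceptable depends entirely on the downstream use, which is why lossy methods call for caution.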

However effective, these strategies can be difficult to implement long-term. Large-scale enterprises may find them particularly challenging to maintain, as they often use more data, resources, and instances than small-scale enterprises. Organizations with small IT teams may struggle if engineers lack the time or resources to practice consistent data management.

In both cases, data cost optimization tools can help. Visibility tools assist with data observability and tiering, while data lakehouse-optimized, lossless compression tools immediately reduce data costs without impacting downstream usage. The best tool – Granica Crunch – combines sophisticated lossless and lossy compression algorithms to improve data lake storage efficiency.

How Granica shrinks cloud data lake costs

Granica Crunch is a cloud data cost optimization platform that’s purpose-built to help data platform owners and data engineers lower the cost of their data lake and lakehouse data. It uses novel, state-of-the-art algorithms that preserve critical information while reducing storage costs and minimizing compute utilization. Key Crunch characteristics:

- Adaptive Compression: Proprietary, state-of-the-art algorithms dynamically adapt to the unique structure of an organization’s big data sets, even as their data evolves.
- Efficient Storage Footprint: Crunch transforms existing Parquet files into significantly smaller Parquet files, reducing the size and cost of large-scale data sets by up to 60%.
- Faster, Efficient Queries: Compressed data requires fewer read/write operations, accelerating query performance by up to 56% while also reducing I/O and compute costs. Crunch compression is particularly beneficial for I/O-intensive workloads.
- Efficient Data Transfer: Compressed data reduces the amount of data transmitted over the network, leading to lower network latency, faster throughput, and faster query responses in environments where network bandwidth is a limiting factor.
- Parquet and Spark-Native: Supports the OSS Parquet standard with zero changes to Parquet reader applications. Crunch is not in the read path.
- Multi-Cloud Support: Native support for Amazon S3 and Google Cloud Storage data lakes and lakehouses.
- Highly Secure: Crunch deploys within an organization’s VPC (Virtual Private Cloud), respecting all security controls.

Crunch can decrease data storage costs by up to 60% – even for large-scale data science, machine learning, and artificial intelligence datasets.
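To put a percentage reduction in concrete terms, the arithmetic can be sketched as follows. The data lake size and the per-TB price are hypothetical inputs, and since 60% is stated as an upper bound, the example also shows smaller reductions.

```python
def monthly_storage_cost(size_tb, price_per_tb_month):
    """Monthly storage cost for a given size and unit price."""
    return size_tb * price_per_tb_month


def cost_after_reduction(cost, reduction_fraction):
    """Cost remaining after reducing stored bytes by the given fraction."""
    if not 0.0 <= reduction_fraction <= 1.0:
        raise ValueError("reduction_fraction must be between 0 and 1")
    return cost * (1.0 - reduction_fraction)


# Hypothetical inputs: a 500 TB data lake at $23 per TB per month.
baseline = monthly_storage_cost(500, 23.0)
for fraction in (0.2, 0.4, 0.6):
    reduced = cost_after_reduction(baseline, fraction)
    print(f"{fraction:.0%} reduction: ${reduced:,.2f} per month")
```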

Request a free demo to learn how Granica’s cloud data cost optimization platform can help you reduce cloud data lake storage costs by up to 60%.