Moving into the second half of 2024, companies continue to focus heavily on data privacy concerns, especially as the use of generative AI (genAI) expands. According to a recent survey by HiddenLayer, 77% of businesses experienced an AI-related breach in the last year[1].

In addition, the increasing prevalence of breaches caused by human error, ransomware, and AI-powered threats makes it clear that data privacy and security practices must evolve. This post discusses four emerging data privacy trends influencing the tech industry that can help companies protect their most valuable data in 2024 and beyond.

4 Data privacy trends shaping the future of tech

1. Privacy concerns over deepfake technology drive new laws and protective measures

The current controversy over OpenAI’s alleged copying of Scarlett Johansson’s voice for the “Sky” voice of its new GPT-4o chatbot[2], which followed closely behind Taylor Swift’s deepfake nightmare in January[3], continues to push AI privacy concerns to the forefront of public attention. AI’s ability to mimic voices and seamlessly superimpose individual faces onto other people’s bodies - or even onto entirely fabricated content - represents a significant threat to personal privacy.

Cybercriminals are also using deepfake technology for sophisticated social engineering schemes, as in the case of a Hong Kong finance company that paid out $25 million in February to an “executive” after a deepfake video conference call[4]. It is likely only a matter of time before a similar scheme leads to a headline-making data breach.

Deepfakes have the potential to cause significant financial and personal harm to everyday people, but it is the high-profile celebrity incidents that are forcing lawmakers to pay close attention.

Twenty U.S. states have already passed laws targeting deepfakes (primarily for sexually explicit content or elections), with more legislative proposals pending at both state and federal levels. In addition, the EU’s new AI Act attempts to regulate deepfake technology by requiring creators to identify AI-generated content[5].

Deepfake Laws by Country/State

As the regulatory landscape continues evolving to address deepfake privacy threats, AI developers and organizations using genAI tools for legitimate reasons may face greater constraints that could negatively impact business outcomes and ROI.

The tech industry’s response to the threat of deepfakes includes developing AI-powered detection tools, like Intel’s FakeCatcher or Onfido’s Fraud Lab, to help identify AI-manipulated content and prevent harmful images and videos from proliferating.

In addition, social media platforms, including Meta, are imposing stricter policies on identifying AI-generated images and videos in advance of the 2024 U.S. presidential election[6]. As deepfake technology grows more sophisticated, the market for solutions to prevent and detect deepfakes will likely expand and evolve as well.

2. Issues with AI bias place greater focus on training data quality over quantity to improve outcomes

An AI’s “intelligence” develops as the result of ingesting and analyzing massive quantities of data. Theoretically, the more data you put into an AI, the smarter it gets. However, a focus on scraping as much training data as possible has created significant security and privacy concerns (further discussion below). This quantity-over-quality approach has exacerbated the ongoing issue of AI model bias, which affects how artificial intelligence software determines access to healthcare for minorities[7], generates letters of recommendation for female job candidates[8], and more.

Unfiltered data ingestion also exposes models to training data poisoning, a targeted AI attack in which a malicious actor intentionally contaminates a training dataset to degrade AI performance or to introduce (or intensify) bias. A model trained on toxic content - whether added by an attacker or scraped from web forums and other hotbeds of toxic online behavior - runs the risk of making harmful decisions or outputting offensive material.

These concerns over AI data privacy and ethics are driving a push to focus more attention on curating AI training data to improve quality and combat bias.

In a recent article on AI bias, UC Davis computer science professor Zubair Shafiq notes, “We have to do a better job of curating more representative data sets because we know there are downstream implications if we don’t.”[9]

Data curation isn’t a new concept in the AI field, but the dataset sizes involved with newer, more sophisticated models like GPT-4 make traditional curation methods unfeasible. The AI product marketplace is rapidly responding, with vendors like Lightly and Cleanlab offering large-scale, AI-powered, automatic data curation solutions. AI teams can use these tools to identify outliers, mistakes, and other issues that could bias models or otherwise negatively affect quality.
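As a rough illustration of what automated curation checks look for, the following minimal Python sketch flags exact duplicates and length outliers in a small text dataset. It is not how Lightly, Cleanlab, or any other vendor works; the function name `curate`, the `length_factor` threshold, and the sample records are all hypothetical, and real curation tools rely on far richer signals than these two heuristics.

```python
from statistics import median


def curate(records: list[str], length_factor: float = 5.0) -> dict[str, list[int]]:
    """Return indices of repeated records and of records with suspicious lengths.

    Hypothetical heuristics for illustration only:
    - a record is a duplicate if its case- and whitespace-normalized text was seen before
    - a record is a length outlier if it is empty or longer than
      length_factor times the median record length
    """
    seen: set[str] = set()
    duplicates: list[int] = []
    for i, text in enumerate(records):
        key = " ".join(text.lower().split())  # normalize case and whitespace
        if key in seen:
            duplicates.append(i)
        else:
            seen.add(key)

    lengths = [len(r) for r in records]
    mid = median(lengths) if lengths else 0
    outliers = [
        i for i, n in enumerate(lengths)
        if n == 0 or (mid > 0 and n > mid * length_factor)
    ]
    return {"duplicates": duplicates, "length_outliers": outliers}


if __name__ == "__main__":
    sample = ["The cat sat.", "the  cat sat.", "A dog barked.", "", "x" * 500]
    print(curate(sample))
```

Flagged records would typically go to a human or a second-stage check rather than being dropped automatically, since an unusual record is not necessarily a bad one.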

3. Focusing on security behavior and culture programs (SBCPs) helps reduce employee-caused breaches

Human error has always been a leading cause of data breaches. Most organizations attempt to mitigate this risk with cybersecurity awareness training designed to ensure employees know what threats to look for and understand their roles in keeping data private.

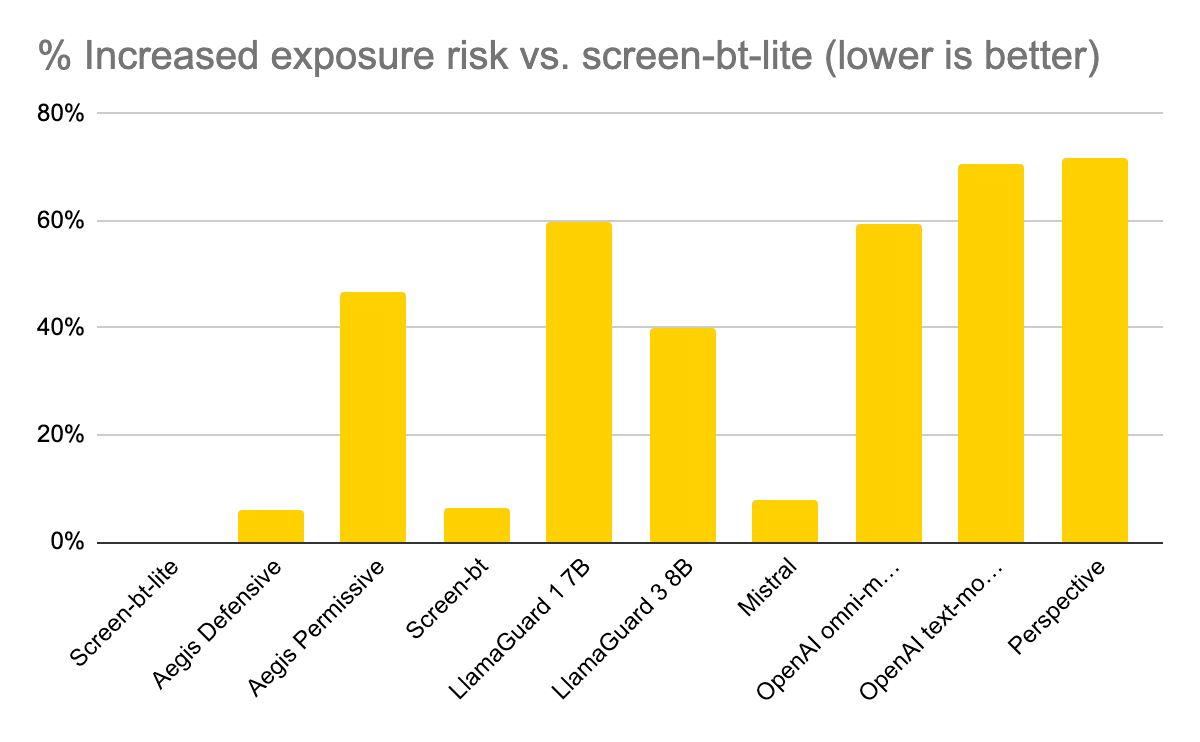

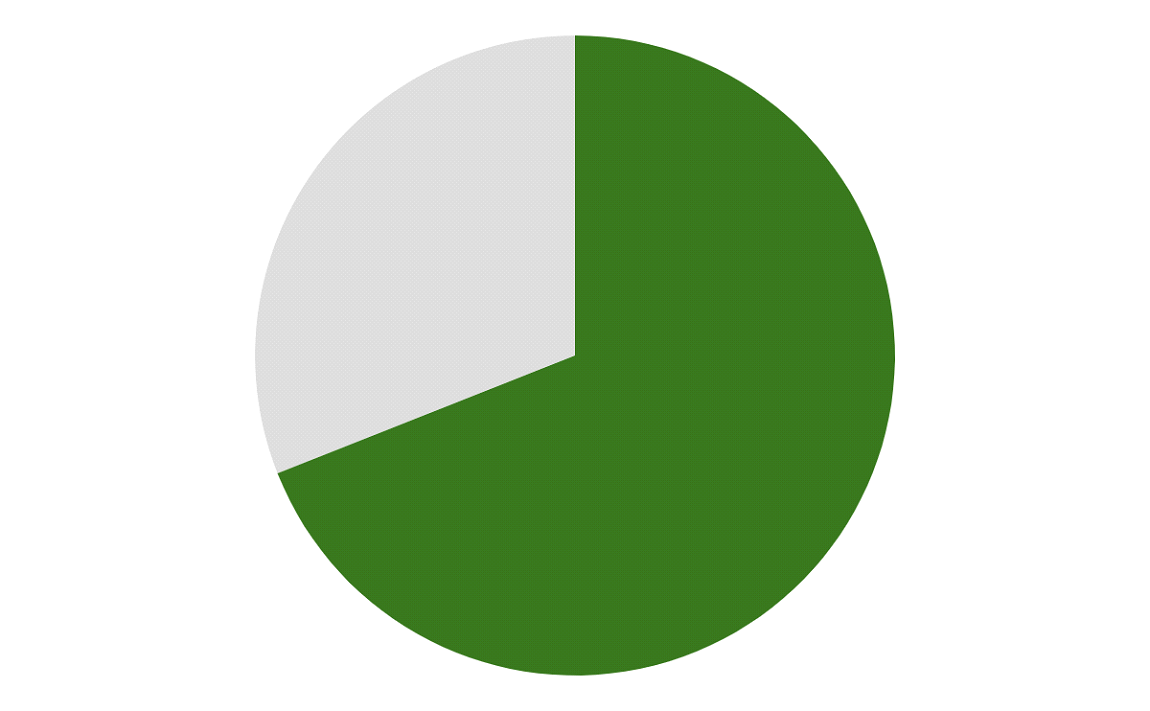

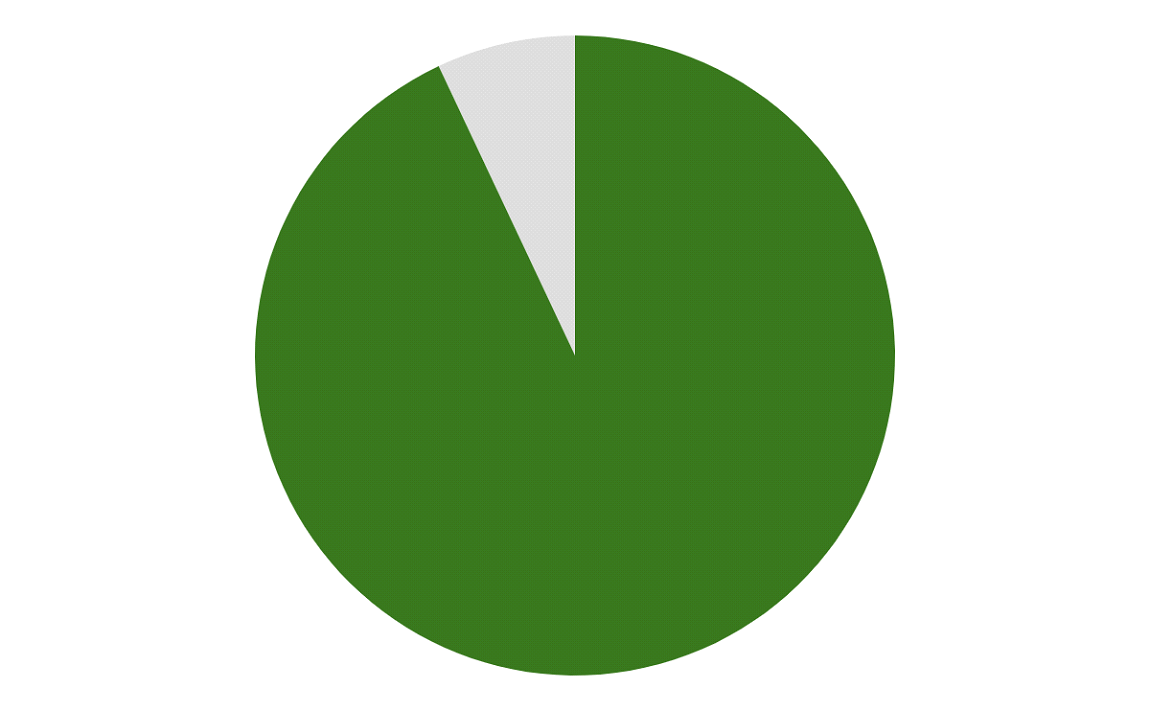

However, we believe this strategy seems to have little effect. “The 2022 Gartner® Drivers of Secure Behavior Survey found that 69% of employees have bypassed their organization’s cybersecurity guidance in the previous 12 months,” and “93% of the employees knew their actions would increase risk to their organization but undertook them anyway[10].” As noted in the charts below, an explosion in generative AI adoption and usage among non-technical staff exacerbates the challenge by providing another avenue for employees to expose sensitive information.

69% of employees admitted to deliberately bypassing organizational security controls in the previous 12 months.

93% of employees knew their actions would increase risk to their organization but undertook them anyway.

Statistics sourced from Gartner[10]

Employees cite numerous reasons for ignoring or circumventing data privacy and security controls, like a company culture that prioritizes speed and profit over security, or what’s known as “security fatigue” – a feeling of exhaustion at having to use too many apps, passwords, and one-time codes to complete daily tasks. A Gartner report states that “Security behavior and culture programs (SBCPs) [are an] enterprisewide approach to minimizing cybersecurity incidents associated with employee behavior, whether inadvertent or deliberate[11].”

As the name suggests, an SBCP aims to change employee behavior and the company culture. It combines traditional security training with organizational policy changes and software development practices like change management, human-centered user experience (UX) design, and DevSecOps.

To streamline SBCP implementation, Gartner recommends that companies “Focus SBCP efforts on the riskiest employee behaviors by regularly reviewing a defensible sample of past cybersecurity incidents to determine the volume and type of cybersecurity incidents associated with unsecure employee behavior[12].”

4. Generative AI introduces new attack surfaces, driving changes to data security and privacy practices

The rapid advancement of large language models (LLMs) and other generative AI technologies and their widespread adoption by businesses and end users are among the biggest, most disruptive influences in the tech industry today. As high-profile incidents like the ChatGPT data leak[13] illustrate, AI’s insatiable appetite for data - and end users’ propensity to reveal sensitive information in LLM prompts - pose huge data privacy challenges.

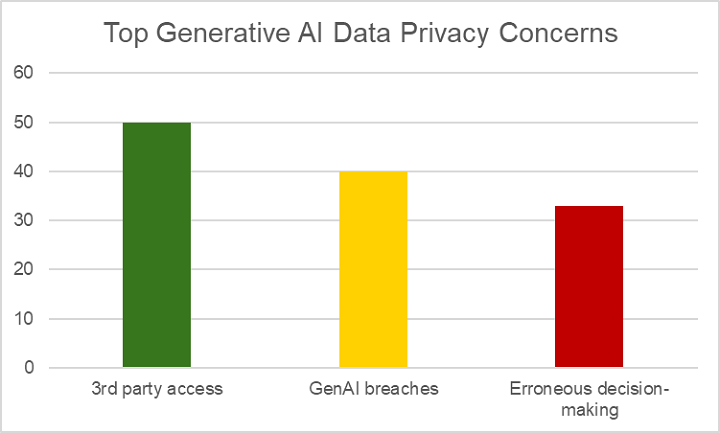

According to Cisco’s 2024 Data Privacy Benchmark Study, 48% of respondents admitted entering confidential company information into genAI prompts, a major reason why 27% of companies have banned its use[14]. Similarly, Gartner’s Top Trends in Cybersecurity for 2024 report states that “the top three risk-related concerns about the usage of GenAI are:

- Access to sensitive data by third parties (a concern of nearly half the cybersecurity leaders who responded)

- GenAI application and data breaches (two-fifths of the responding cybersecurity leaders)

- Erroneous decision-making (more than one-third of the responding cybersecurity leaders)”[15]

Graph created by Granica based on Gartner research.

The need to defend new attack surfaces and prevent sensitive data exposure is driving changes to data privacy practices and cybersecurity controls. Some of the new, genAI-focused data privacy trends include:

- Using specialized security controls (like PII data discovery/masking or AI firewalls) capable of protecting attack surfaces at runtime, e.g., as LLM prompts are entered.

- Using the AI trust, risk, and security management (TRiSM) framework[16] to protect AI applications, prompts, and orchestration layers.

- Performing frequent data security risk assessments, including resilience-driven third-party cybersecurity risk management (TPCRM).
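The first practice in the list above, masking PII in prompts at runtime, can be illustrated with a minimal Python sketch. This is not Granica Screen's implementation or any vendor's API: the `PII_PATTERNS` table and the `mask_prompt` function are hypothetical, and the three regular expressions cover only simple U.S.-style formats. Production-grade PII discovery generally needs much broader detection than pattern matching alone.

```python
import re

# Hypothetical, deliberately narrow patterns for illustration only.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def mask_prompt(prompt: str) -> str:
    """Replace each matched span with a placeholder such as [EMAIL]."""
    masked = prompt
    for label, pattern in PII_PATTERNS.items():
        masked = pattern.sub(f"[{label}]", masked)
    return masked


if __name__ == "__main__":
    raw = "Email jane.doe@example.com or call 555-123-4567 about SSN 123-45-6789."
    # The masked text, not the raw prompt, is what would be sent to the LLM.
    print(mask_prompt(raw))
```

The key design point is where the check runs: masking happens before the prompt leaves the organization's environment, so the model provider only ever sees the placeholders.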

Update your data privacy strategy with Granica

Granica Screen is a data privacy service that protects sensitive data in cloud data lakes for use in model training and in LLM prompts/outputs at inference time. Screen offers real-time PII data discovery and masking with extremely high accuracy, protecting prompts before they are passed to LLMs. The lightweight, resource-efficient application runs inside the customer’s cloud to ensure sensitive data never leaves the environment, reducing security risks and ensuring total privacy. Granica Screen enables companies to take advantage of AI-focused data privacy trends cost-effectively and without affecting AI model performance or business outcomes.

Granica Screen delivers real-time data discovery and masking for generative AI training data and prompts. Request a demo today.

Sources:

- https://hiddenlayer.com/threatreport2024/

- https://www.reuters.com/technology/scarlett-johanssons-openai-feud-rekindles-hollywood-fear-artificial-intelligence-2024-05-23/

- https://www.scientificamerican.com/article/tougher-ai-policies-could-protect-taylor-swift-and-everyone-else-from-deepfakes/

- https://www.cnn.com/2024/02/04/asia/deepfake-cfo-scam-hong-kong-intl-hnk/index.html

- https://onfido.com/blog/deepfake-law/

- https://www.reuters.com/technology/cybersecurity/meta-overhauls-rules-deepfakes-other-altered-media-2024-04-05/

- https://minorityhealth.hhs.gov/news/shedding-light-healthcare-algorithmic-and-artificial-intelligence-bias

- https://arxiv.org/html/2310.09219v5

- https://www.universityofcalifornia.edu/news/three-fixes-ais-bias-problem

- Gartner, Top Trends in Cybersecurity for 2024, by Richard Addiscott, Jeremy D’Hoinne, Chiara Giardi, Pete Shoard, Paul Furtado, Tom Scholtz, Anson Chen, William Candrick, Felix Gaehtgens, 2 January 2024. GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.

- Gartner, Top Trends in Cybersecurity for 2024, by Richard Addiscott, Jeremy D’Hoinne, Chiara Giardi, Pete Shoard, Paul Furtado, Tom Scholtz, Anson Chen, William Candrick, Felix Gaehtgens, 2 January 2024.

- Gartner, Top Trends in Cybersecurity for 2024, by Richard Addiscott, Jeremy D’Hoinne, Chiara Giardi, Pete Shoard, Paul Furtado, Tom Scholtz, Anson Chen, William Candrick, Felix Gaehtgens, 2 January 2024.

- https://www.spiceworks.com/tech/artificial-intelligence/news/chatgpt-leaks-sensitive-user-data-openai-suspects-hack/

- https://investor.cisco.com/news/news-details/2024/More-than-1-in-4-Organizations-Banned-Use-of-GenAI-Over-Privacy-and-Data-Security-Risks---New-Cisco-Study/default.aspx

- Gartner, Top Trends in Cybersecurity for 2024, by Richard Addiscott, Jeremy D’Hoinne, Chiara Giardi, Pete Shoard, Paul Furtado, Tom Scholtz, Anson Chen, William Candrick, Felix Gaehtgens, 2 January 2024.

- https://www.sciencedirect.com/science/article/abs/pii/S0957417423029445