Data security remains a top concern for business leaders across every industry as the rate of newsworthy data breaches – and the severity of their consequences – increases each year. AT&T is the latest corporation facing legal action after failing to protect the personal data of 73 million current and former customers in a recent cyberattack. Rapidly growing artificial intelligence technologies like large language models (LLMs) tend to expand a company’s attack surface, as reflected in a recent HiddenLayer study that reveals 77% of businesses experienced an AI breach in the last year.

Organizations face numerous challenges to data security, including an increase in sophisticated cyber threats like ransomware and social engineering, accidental exposure of data through generative AI prompts, and targeted attempts to compromise AI models. The table below summarizes some of the biggest challenges and pairs them with data security best practices and tools to help mitigate them. Click the links in the left column to read more about each data security challenge.

Data Security Challenges & Best Practices

| Challenge | Description | Solutions |

|---|---|---|

| IT Complexity | Highly complex digital architectures make it difficult to identify and protect all sensitive data | Data risk assessments PII data discovery Data observability |

| Ransomware | Malicious software spreads throughout a network, encrypting all the data it touches until companies pay a ransom | Secure data backups Isolated recovery environment Automated patch management |

| Compromised Accounts & Insider Threats | Attacks using legitimate account credentials are difficult to detect, track, or stop | Zero Trust Security Least-privilege RBAC User and Entity Behavior Analytics (UEBA) |

| Accidental Exposure | Employees and customers accidentally expose sensitive data through negligence or LLM prompts | Data loss prevention (DLP) Data encryption & key management Synthetic data Data masking |

| AI Attacks | Malicious actors specifically target AI models with inadequate security controls and massive datasets | AI firewall Model output validation AI data leak prevention |

Data security challenges

Four of the biggest data security challenges include:

IT complexity

Modern digital architectures are composed of thousands of moving parts and grow more complex over time as businesses adopt new applications, devices, and cloud services. It’s difficult enough to locate and protect sensitive data across all known apps and services, but the task increases in difficulty with the addition of shadow IT, a common scenario in which individual teams purchase and implement new technologies without IT approval. Even the best security analysts can’t protect what they don’t know about, so gaining full visibility of all services and data an organization uses is crucial to data security.

Solutions (click the links to learn more):

Ransomware

Ransomware, malicious software that encrypts all the files on a system and holds them hostage for a ransom payment, poses an especially pervasive and disruptive data security threat. It often exploits vulnerabilities in outdated software, hardware, or services using code that’s undetectable by endpoint and firewall security solutions to breach the network. It then quickly proliferates and encrypts everything in its path - including data backups - bringing entire companies offline in a matter of hours.

Solutions:

Compromised accounts & insider threats

Data breaches occur so frequently that it’s a best practice to assume the organization has already been infiltrated. Outside attackers use a variety of methods to compromise accounts for access to sensitive resources, from brute-force attacks that guess user name/password combinations to social engineering tactics like email phishing that trick employees into revealing authentication details. Insider threats are even more insidious because they often involve disgruntled (or financially incentivized) employees who leak or steal data intentionally. In both cases, attackers use legitimate credentials to access resources, so traditional security solutions like antivirus and firewalls won’t detect them. Such actors could remain on the network for months - or even years - while continuously exfiltrating sensitive data.

Solutions:

Accidental exposure

Human error and negligence are the leading causes of data breaches, from misconfigured cloud security settings to poor password hygiene (e.g., writing passwords on sticky notes or saving them in an unencrypted desktop file). Often, employees who lack training handle sensitive or regulated data improperly, inadvertently exposing PII or other confidential information. Companies using generative AI and other LLM technologies also face data security challenges from users and applications, including sensitive information in generative AI/LLM inputs, which increases the risk of data exposure by an attacker or the AI model itself.

Solutions:

AI attacks

The highly complex nature of AI technology, combined with the enormous datasets they use for training and operation, make them attractive targets for cybercriminals. Hackers use a variety of methods to expose sensitive data in AI models, including inference attacks, data linkage, and prompt injections. These AI attacks typically employ specific kinds of prompts to manipulate an AI into exposing sensitive information. Because the technology is so new, AI attacks are difficult to prevent and detect, and many organizations lack the AI-specific security solutions required to defend their models.

Solutions:

Data security best practices & tools

The following data security best practices and tools can help limit the attack surface and mitigate threats.

Perform data risk assessments to understand the attack surface

Ultimately, any data security strategy will fail if an organization doesn’t fully comprehend all data assets and their potential risks. A data risk assessment involves comprehensively evaluating the entire digital architecture to identify where all the data is, its relative sensitivity and/or value, and the risks associated with storing, processing, and sharing it. This process includes:

- Discovering and classifying all data in use across the organization according to sensitivity or value

- Evaluating the current security policies and controls protecting sensitive data to find any vulnerabilities

- Assessing the financial, reputational, and regulatory impact of various threats to prioritize remediation efforts

A data risk assessment is a crucial first step to improving an organization’s data security posture because it ensures IT teams start with full knowledge of all data and vulnerabilities across the architecture. From there, teams can begin implementing the best practices and tools required to protect that data.

Use PII data discovery and masking tools to identify & remove sensitive info

Identifying all the sensitive information an organization stores or processes is crucial to assessing risk and preventing exposure. PII data discovery and masking tools use high-accuracy named entity recognition (NER) to discover identifying, and even psuedo-identifying, information within data files, such as phone numbers, addresses, and dates of birth. These tools help teams locate all the sensitive and regulated data that needs protection through some form of data masking. Data masking anonymizes and de-identifies sensitive information, allowing the files themselves to be used while mitigating the risk of PII exposure.

Some PII data discovery tools, like Granica Screen, can not only mask sensitive information but also replace it with synthetic data. Synthetic data looks realistic but doesn’t contain any actual PII - an example would be replacing a valid phone number with 123-456-7891. Synthetic data allows organizations to train their generative AI models and increase accuracy through additional context without the risk of exposing any real PII in training data or inputs.

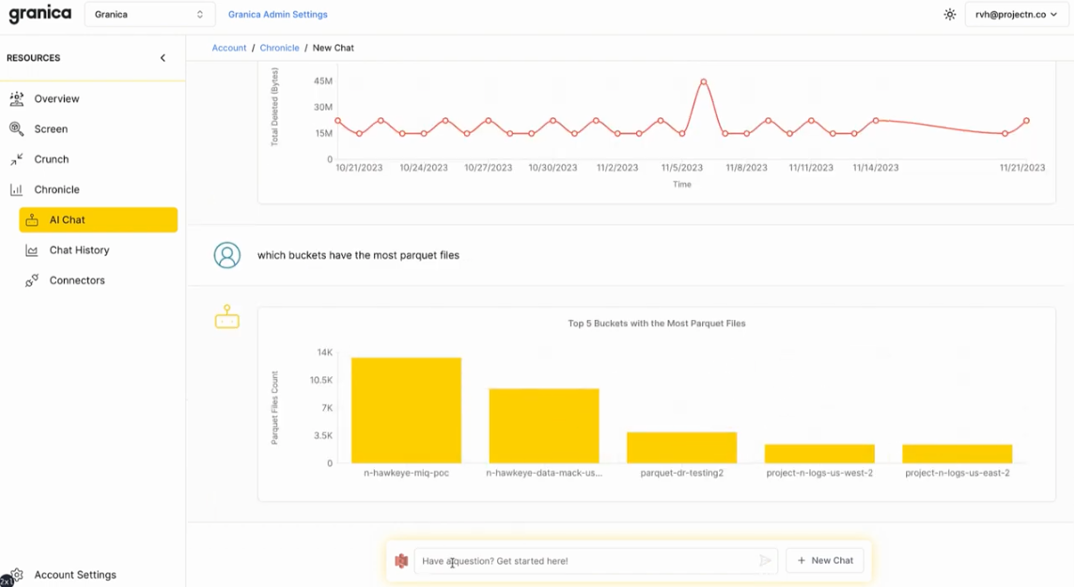

See who & what is accessing files with data observability tools

Visibility into who or what accesses sensitive data is core to many data privacy regulations, including HIPAA and the CCPA in the US and the GDPR in the EU. Data observability tools give IT teams real-time visibility into sensitive data usage across applications, systems, and AI models to aid in compliance and breach detection. The key is to use a solution like Granica Chronicle AI that only looks at file metadata without requiring full access to file contents, mitigating the risk of data exposure if a vendor is breached.

Ensure data survivability with secure backups

The key to quickly recovering from ransomware and minimizing the disruption caused by breaches and failures is using a secure backup solution like Veeam to ensure data survivability. These solutions have advanced capabilities like malware scanning to prevent ransomware infections. Companies should store multiple copies of business-critical data offsite and, preferably, on an isolated network segment with extremely limited access.

Organizations must keep backup systems and software updated with the latest security patches to prevent attackers from exploiting any known vulnerabilities. Data must also be encrypted - both as it’s transferring to the backup solution and while at rest. Finally, it’s a best practice to make backup data immutable, which means it can’t be changed once it’s in the backup location, as this will prevent ransomware from modifying or encrypting any of the files.

Prevent ransomware reinfection with an isolated recovery environment

Until ransomware is completely removed from the network, there’s a high likelihood that newly rebuilt systems and recovered data will get re-encrypted, starting the whole cycle over again and extending the business downtime. An isolated recovery environment is a dedicated network that contains all the hardware and software required to rebuild systems and applications, restore data, and run security tests before deploying anything to production. It must be completely inaccessible from the main network to prevent compromised accounts and/or ransomware from jumping from one to the other.

Mitigate vulnerabilities with automated patch management

Unpatched vulnerabilities in out-of-date software are a leading cause of data breaches, just like the MOVEit exploitation responsible for what was arguably the biggest hack of 2023. Managing updates for all the applications and systems in use across an organization is extraordinarily challenging, but a centralized, automated patch management solution can help. These tools monitor software and firmware versions, check for vendor-issued updates, and schedule deployments to ensure nothing gets missed. They also provide a central hub where IT teams can view versioning and patch information for the entire enterprise architecture from a single pane of glass.

Limit sensitive data exposure with Zero Trust Security

Zero Trust Security is a cybersecurity approach that assumes a network is already breached and that any account could be compromised. This methodology involves segmenting digital resources by location (e.g., the cloud vs. on-premises), sensitivity, and access requirements, then building individual security perimeters around each segment according to its needs. These perimeters include highly specific, least-privilege access policies (see below) and targeted security controls that require users to prove their identity each time they request access.

The goal of Zero Trust Security is to halt the lateral movement of a compromised account on the network and limit the scope of an attack. A hacker with a stolen username and password may be able to log into the network, but Zero Trust controls like multi-factor authentication will prevent them from accessing the most sensitive data in protected segments. Zero Trust also increases the likelihood that compromised accounts will be detected and locked while trying to access restricted resources.

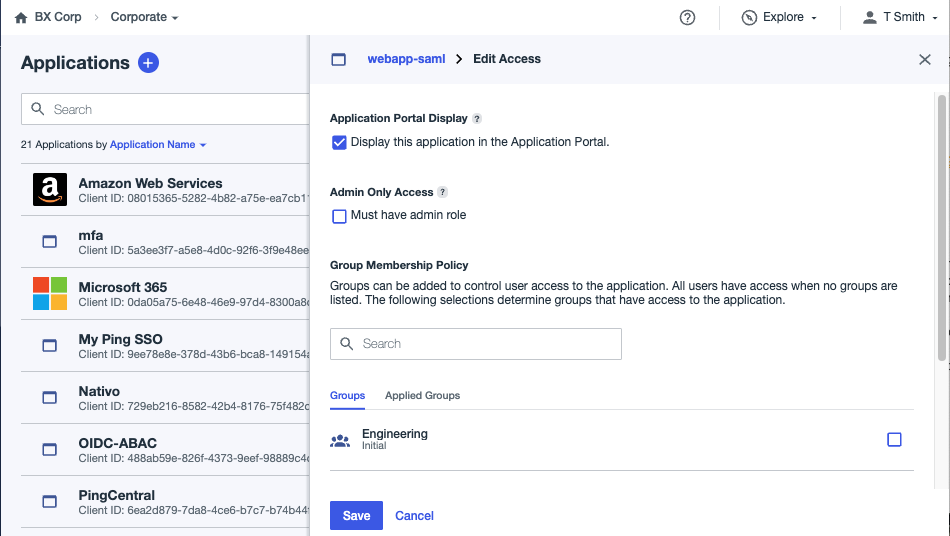

Restrict data access with least-privilege RBAC

“Least privilege” is a concept related to Zero Trust that helps prevent unauthorized data access and exfiltration. In it, admins provision user accounts with the least amount of access privileges required to complete their daily workflows. Least privilege is often used in conjunction with role-based access control (RBAC), which assigns privileges based on an account user’s role within an organization rather than assigning them individually.

For example, a customer service manager may use a “CS” account to access emails and customer files, and a separate “supervisor” account to handle HR-related tasks like timecard approvals, rather than using an individual account to do everything. Each role gets a specific set of privileges according to its needs and has little-to-no crossover, limiting the damage that a single compromised account could inflict.

RBAC is part of a comprehensive identity and access management (IAM) strategy. An IAM solution (such as PingOne) consolidates many identity, access, and authentication capabilities in one place, providing holistic oversight and simplified administration.

Detect anomalous activity with user and entity behavior analytics (UEBA)

Traditional security monitoring solutions have a blind spot when it comes to detecting threats using valid, authenticated accounts to access sensitive data. User and entity behavior analytics (UEBA) uses AI and machine learning to monitor account and device activity, establishing baselines for acceptable behavior and detecting anomalies that could indicate a threat. For example, UEBA may notice a user suddenly transferring gigabytes of data to external media and lock their account until an IT administrator verifies the activity is valid and not the result of a compromise or internal threat.

Improve data handling practices with cybersecurity training

Since human error and negligence cause so many data breaches, it’s crucial to train staff to handle sensitive information safely and compliantly. At a minimum, all employees should receive basic cybersecurity training on proper password practices, how to use email and download files safely, and how to avoid phishing and other social engineering attacks.

Anyone working with sensitive data needs additional training on keeping it private and maintaining compliance with any relevant regulations. And, since everyone makes mistakes, it’s also crucial that employees know how to report any potential security issues and feel comfortable doing so without fearing retaliation. If an organization uses LLMs at any level, it’s important to create a generative AI policy that meets regulatory compliance requirements and then train all employees on what information they can and can’t include in prompts.

Use data loss prevention (DLP) to stop data leaks

Data loss prevention is a set of tools and practices for detecting and preventing sensitive data leakage. DLP starts with a comprehensive policy defining how data can be used and shared across the organization, which should align with regulatory compliance requirements. Then, organizations can use software like Forcepoint DLP to monitor sensitive data, identify policy violations, and support remediation with automated alerts and protective actions to prevent intentional or unintentional data leaks.

While DLP has long been considered a data security best practice, and the solution marketplace is mature, many organizations find traditional DLP tools and strategies difficult to implement. DLP solutions are also reactive, which means they don’t proactively prevent data leaks, they just halt those already in progress. As a result, some companies are shifting away from DLP as a core strategy and focusing more on preventative data protection measures like encryption, key management, and data masking.

Proactively secure data with encryption & key management

Encrypting data both at rest and in transit ensures that even leaked or stolen data will be unusable, mitigating privacy concerns. However, many organizations struggle to efficiently manage cryptographic keys, resulting in insecure practices like reusing keys, forgetting to destroy expired keys, or not encrypting keys stored in server memory. A secure encryption key management solution helps streamline and automate tasks like strong key generation, automatic key rotation, and secure key destruction. Encryption and key management (along with data masking and synthetic data) help prevent sensitive information from being exposed, even if leaked files make it past DLP’s detection algorithm.

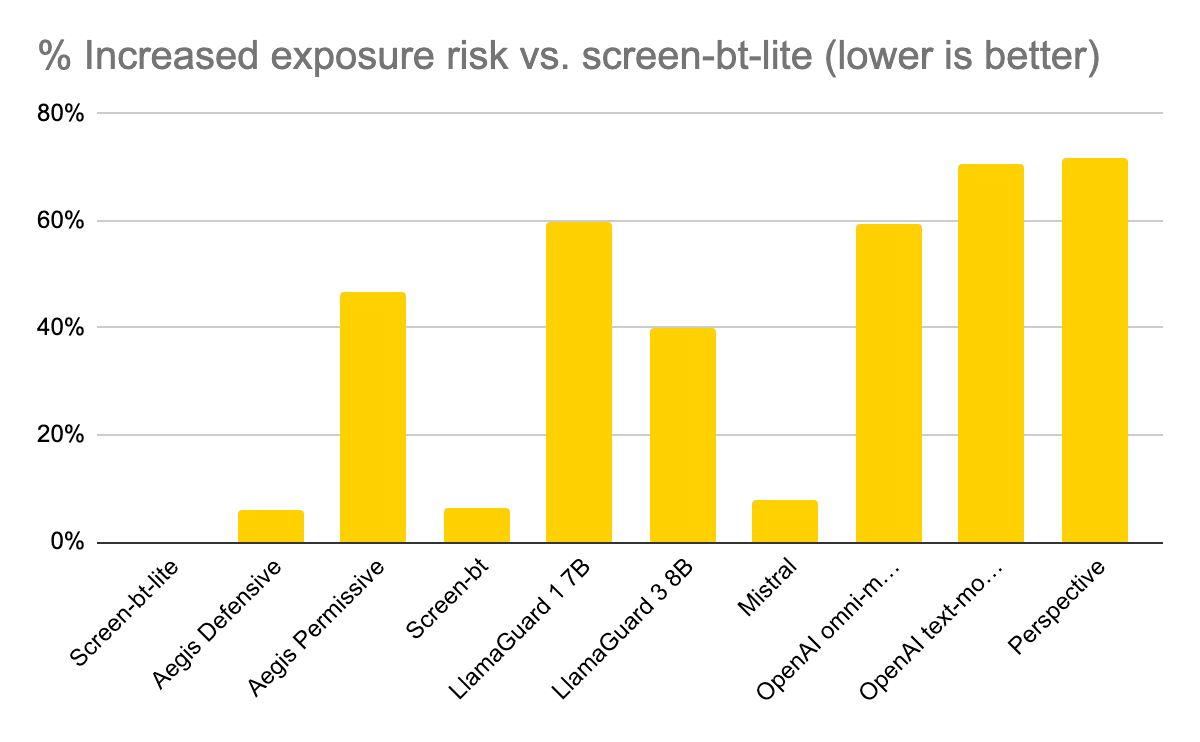

Protect AI models with an AI firewall

LLMs ingest vast quantities of prompt inputs that may conceal attempts to manipulate model behavior or expose sensitive information. An AI firewall inspects every input for potentially malicious payloads and blocks them before ingestion. Some solutions also detect model security vulnerabilities, use rate-limiting policies to prevent Denial-of-Service (DoS) attacks, and validate model outputs in real-time (see below). These features help prevent generative AI tools from exposing any sensitive information the other tools and policies missed.

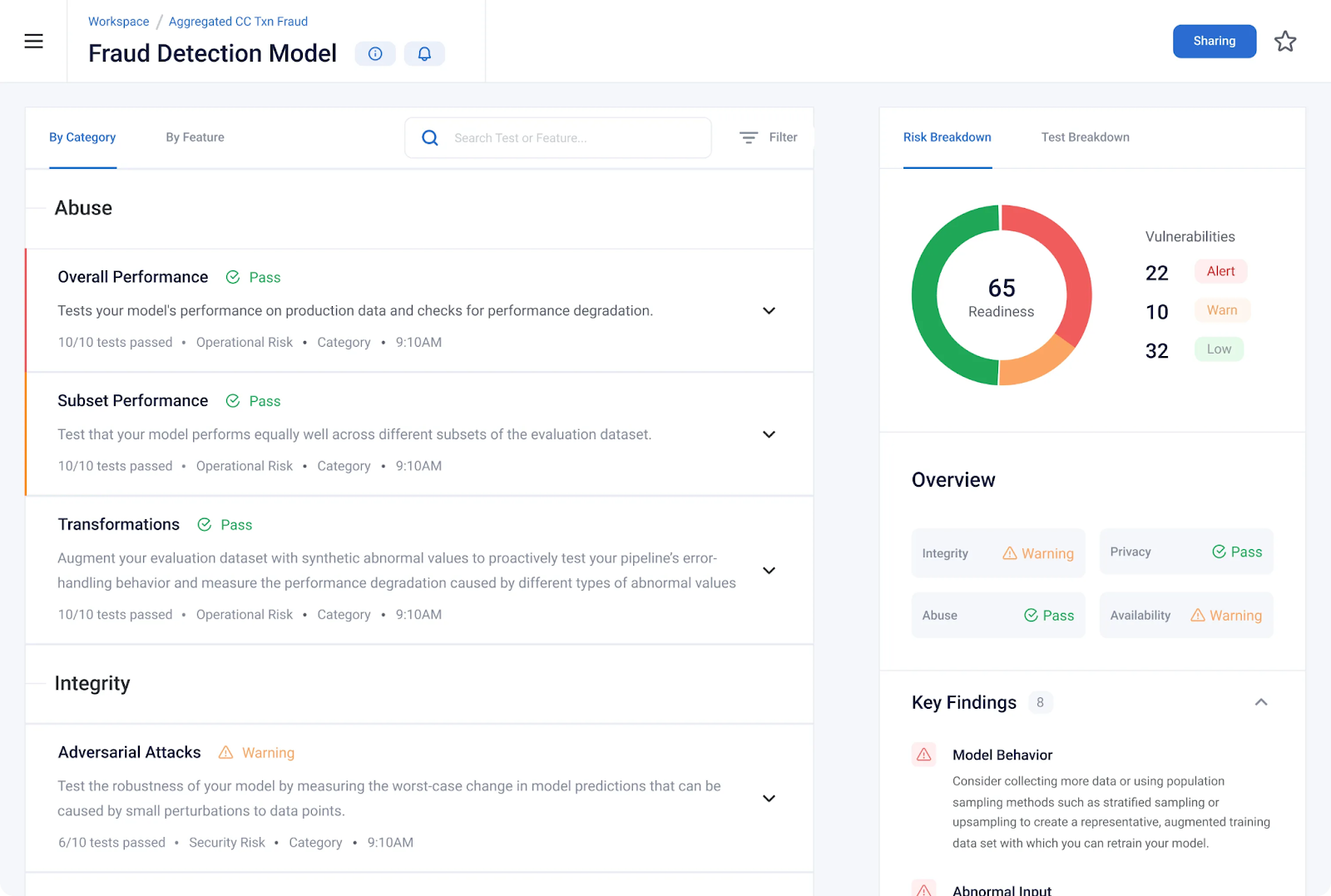

Continuously validate model outputs for undesired responses

Even with clearly defined LLM prompt policies and a state-of-the-art AI firewall, it’s still possible for models to generate undesired outputs. An AI validation tool like Robust Intelligence continuously monitors model outputs to detect PII, hallucinations, and other sensitive or harmful content. These solutions automatically notify engineers with analyzes of potential causes, allowing teams to remediate problems as quickly as possible to prevent additional data exposure.

Defend AI datasets with AI data leak prevention

AI data leak prevention tools work similarly to traditional DLP solutions, but they’re optimized to stop data loss in LLMs and other generative AI applications. These tools typically include policies that comply with the EU’s new AI Act, which is the first data privacy regulation specifically targeting AI. They also look for AI-specific data exfiltration techniques like prompt injections and training data poisonings that older data security tools miss. AI data leak prevention and other AI data privacy tools (like the PII identification, data masking, and synthetic data solutions mentioned above) help organizations stay compliant and secure while innovating with the latest artificial intelligence technology.

Keep data secure without sacrificing AI outcomes

Granica Screen is a data privacy service offering PII and sensitive data discovery, masking, and synthetic data generation to safely unlock data for use with LLMs and other AI models. Screen delivers state-of-the-art accuracy across 100+ languages and 25+ regions to improve privacy and compliance globally. Granica’s unified platform offers real-time prompt protection, cloud data lake training file scanning, and data observability via the Chronicle AI solution. Plus, Granica’s platform deploys inside your cloud environment, further protecting data security by ensuring sensitive information never leaves your environment.

Request a free demo to learn more about achieving data security best practices for your AI models with Granica.