Data security remains a major concern for organizations across every industry, with Apple reporting a 20% increase in data breaches from 2022 to September 2023. At the same time, IT teams find protecting sensitive data more challenging than ever, due to the growing frequency and sophistication of attack methods, negligent data handling practices, the adoption of AI technology, and a lack of visibility into data environments. This post discusses these data security challenges in greater detail and provides resources for overcoming them.

4 data security challenges and solutions

| Data Security Challenges | Solutions |
|---|---|
| Ransomware and other sophisticated threats | Cybersecurity training and the zero-trust security model |
| Human error and negligence | Data security platforms (DSPs) and IT automation |
| AI data leaks and attacks | PII data screening, AI firewalls, and data access governance |
| IT complexity / “shadow IT” | Data discovery and access/utilization observability solutions |

Ransomware and other sophisticated threats

Cybercriminals use a variety of sophisticated methods to breach networks. According to IBM’s 2023 Cost of a Data Breach Report, phishing and other social engineering tactics caused 24% of last year’s data breaches. Once a malicious actor compromises an account, they can access systems, exfiltrate data, and deploy ransomware to extort payment in exchange for file decryption or a promise not to release sensitive information.

Anti-phishing software can help identify phishing content in emails and websites, but ultimately, the most effective defense against social engineering is cybersecurity training. All personnel should be trained, with regular refreshers, to spot and avoid social engineering tactics.
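One heuristic anti-phishing tools commonly apply is flagging sender domains that closely resemble, but do not exactly match, a trusted domain (for example, `examp1e.com` posing as `example.com`). The sketch below illustrates that single check using Levenshtein edit distance. The allowlist, the distance threshold, and the function names are hypothetical choices for illustration, not how any particular product works.

```python
# Hypothetical sketch: flag lookalike sender domains, one of many phishing signals.

TRUSTED_DOMAINS = {"example.com", "examplebank.com"}  # assumed allowlist


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed with a rolling dynamic-programming row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def is_lookalike(sender: str, max_distance: int = 2) -> bool:
    """True if the sender's domain is near, but not equal to, a trusted domain."""
    domain = sender.rsplit("@", 1)[-1].lower()
    if domain in TRUSTED_DOMAINS:
        return False
    return any(edit_distance(domain, t) <= max_distance for t in TRUSTED_DOMAINS)


print(is_lookalike("alerts@examp1e.com"))  # True: "1" substituted for "l"
print(is_lookalike("alerts@example.com"))  # False: exact trusted domain
print(is_lookalike("news@unrelated.org"))  # False: not similar to a trusted domain
```

A check like this only catches one trick; attackers also use unrelated domains and compromised legitimate accounts, which is why training remains essential.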

Additionally, adopting the zero-trust security model can help limit the scope of a breach and prevent ransomware and other malicious software (malware) from proliferating on the network. Zero trust treats every access request as untrusted until it is verified, requiring multiple layers of authentication and identity verification. This makes it harder for unauthorized users to access sensitive resources, even with a stolen username and password.
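To make the idea concrete, here is a minimal sketch of a zero-trust-style authorization check in which every request is evaluated on its own merits. The user names, resource names, and the particular set of checks (password, MFA, device posture, least-privilege grant) are hypothetical assumptions; real deployments rely on an identity provider and a policy engine rather than an in-memory dictionary.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    user: str
    password_ok: bool       # primary credential verified
    mfa_ok: bool            # second factor verified
    device_compliant: bool  # e.g., managed and patched device (assumed signal)
    resource: str


# Hypothetical least-privilege grants: each user may reach only listed resources.
GRANTS = {"alice": {"payroll-db"}, "bob": {"wiki"}}


def authorize(req: AccessRequest) -> bool:
    """Grant access only if every check passes; network location grants nothing."""
    checks = [
        req.password_ok,
        req.mfa_ok,
        req.device_compliant,
        req.resource in GRANTS.get(req.user, set()),
    ]
    return all(checks)


# A stolen password alone is not enough to reach the resource.
stolen = AccessRequest("alice", password_ok=True, mfa_ok=False,
                       device_compliant=True, resource="payroll-db")
print(authorize(stolen))  # False
```

Because each check is independent, compromising any single factor (here, the password) still leaves the attacker short of access.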

- To learn more, read Data Security Strategy: Key Considerations

Human error and negligence

Mistakes and negligence in data handling, cloud configuration, and systems administration caused approximately 23% of data breaches in 2023. For example, end-users may accidentally email sensitive information to the wrong person, misplace portable media containing confidential files, or let an unauthorized family member use their company-issued device.

On the administrative side, managing access privileges across hundreds or thousands of individual hardware, software, and cloud solutions is tedious for IT teams that are often already stretched thin, which leads to poor practices like “allow all” permissions and other over-provisioned accounts. Teams may also struggle to properly configure other security settings or stay current on security patches, potentially leaving coverage gaps for malicious actors to slip through.
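Over-provisioned permissions like “allow all” are easy to spot automatically once policies are machine-readable. The sketch below scans a policy document modeled loosely on the JSON shape of AWS IAM policies (`Statement`, `Effect`, `Action`, `Resource`) and flags Allow statements that use a bare `*` or a service-wide `service:*` wildcard. The example policy and the flagging rules are illustrative assumptions, not a complete audit.

```python
import json


def _as_list(value):
    """IAM-style fields may hold a single string or a list of strings."""
    return [value] if isinstance(value, str) else list(value)


def find_overbroad_statements(policy_json: str) -> list:
    """Return Allow statements granting all actions, service-wide actions, or all resources."""
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a lone statement may appear unwrapped
        statements = [statements]
    flagged = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements narrow access, so skip them
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        broad_action = any(a == "*" or a.endswith(":*") for a in actions)
        if broad_action or "*" in resources:
            flagged.append(stmt)
    return flagged


# Hypothetical policy with one narrow grant and two over-broad ones.
EXAMPLE_POLICY = json.dumps({
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "ReadReports", "Effect": "Allow",
         "Action": "s3:GetObject", "Resource": "arn:aws:s3:::reports/*"},
        {"Sid": "AllowAll", "Effect": "Allow", "Action": "*", "Resource": "*"},
        {"Sid": "AllS3", "Effect": "Allow",
         "Action": ["s3:*"], "Resource": "arn:aws:s3:::finance"},
    ],
})

print([s.get("Sid") for s in find_overbroad_statements(EXAMPLE_POLICY)])
# ['AllowAll', 'AllS3']
```

Running a check like this on a schedule turns a tedious manual review into an alert that only fires when something needs attention.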

Data security platforms (DSPs) automatically detect and prevent sensitive data leakage by monitoring data sources, email clients, and devices, then raising alerts or taking protective action. DSPs act as a safety net when human error occurs and can also stop intentional data exfiltration by malicious actors. Other automation tools can help with labor-intensive sysadmin tasks that are prone to mistakes, such as new device provisioning, patch management, and cloud configuration management.
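As a rough illustration of the kind of rule a DSP might apply to outgoing email, the sketch below blocks messages that contain sensitive patterns and are addressed outside the organization, and merely alerts when the same content stays internal. The internal domain, the two detectors, and the block/alert/allow policy are all hypothetical; commercial platforms use far richer classifiers and policies.

```python
import re

ORG_DOMAIN = "example.com"  # assumed internal email domain

# Illustrative detectors only: a US SSN-shaped number and a classification label.
PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "confidential_label": re.compile(r"\bCONFIDENTIAL\b"),
}


def check_outbound(recipients: list, body: str) -> str:
    """Return 'block', 'alert', or 'allow' for an outgoing message."""
    external = [r for r in recipients if not r.lower().endswith("@" + ORG_DOMAIN)]
    hits = [name for name, pattern in PATTERNS.items() if pattern.search(body)]
    if hits and external:
        return "block"  # sensitive content would leave the organization
    if hits:
        return "alert"  # sensitive content sent internally: log it for review
    return "allow"


print(check_outbound(["pat@gmail.com"], "Employee SSN: 123-45-6789"))   # block
print(check_outbound(["hr@example.com"], "Employee SSN: 123-45-6789"))  # alert
print(check_outbound(["pat@gmail.com"], "Lunch at noon?"))              # allow
```

The same rule catches both an accidental misaddressed email and a deliberate attempt to send data to a personal account.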

AI data leaks and attacks

AI adoption continues to rise, with as many as 42% of enterprise companies reporting active use of artificial intelligence in some capacity. However, this technology is so new and complex that many organizations lack a complete understanding of how to properly configure, use, or secure their AI solutions.

In addition, AI systems ingest huge datasets for training and operation, including LLM prompts. These datasets often contain personally identifiable information (PII) and other sensitive data, which make them attractive targets for cybercriminals. Attackers use a variety of techniques to compromise AI models, including:

- Inference – probing an AI model for PII-adjacent information and inferring how to fill in the blanks.

- Data linkage – combining information from AI outputs with other online data to re-identify individuals in anonymized datasets.

- Prompt injection – inserting malicious content into LLM prompts to manipulate model behavior or expose sensitive data.
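Data linkage is easy to underestimate, so here is a toy demonstration. Removing names from a dataset does not anonymize it if quasi-identifiers such as ZIP code, birth date, and sex remain, because those can be joined against public data. All records below are fictional, and the join logic is a simplified sketch of the attack, not a description of any specific incident.

```python
# Toy "anonymized" records: names removed, quasi-identifiers kept (fictional data).
anonymized = [
    {"zip": "02139", "birth_date": "1985-07-14", "sex": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_date": "1990-01-02", "sex": "M", "diagnosis": "diabetes"},
]

# Toy public data, such as a voter roll or public profiles (fictional data).
public = [
    {"name": "Jane Roe", "zip": "02139", "birth_date": "1985-07-14", "sex": "F"},
    {"name": "John Doe", "zip": "94105", "birth_date": "1990-01-02", "sex": "M"},
]

QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")


def link(anon_rows, public_rows):
    """Re-identify anonymized rows whose quasi-identifiers match exactly one public row."""
    index = {}
    for row in public_rows:
        key = tuple(row[q] for q in QUASI_IDENTIFIERS)
        index.setdefault(key, []).append(row["name"])
    matches = []
    for row in anon_rows:
        names = index.get(tuple(row[q] for q in QUASI_IDENTIFIERS), [])
        if len(names) == 1:  # a unique match re-identifies the record
            matches.append((names[0], row["diagnosis"]))
    return matches


print(link(anonymized, public))  # [('Jane Roe', 'asthma')]
```

The same principle applies when the "anonymized" data comes from AI model outputs: each released attribute narrows the set of people a record could belong to.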

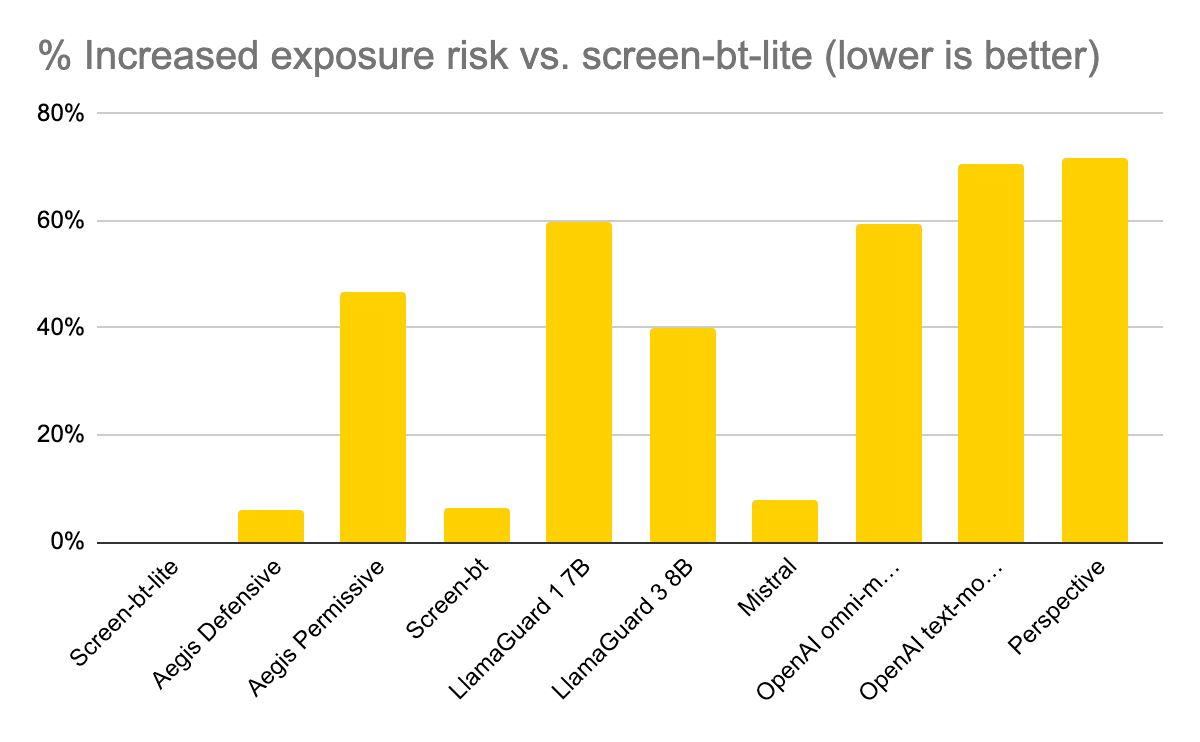

PII data discovery tools automatically locate personal information in AI datasets so it can be removed or replaced with realistic synthetic data. They also monitor prompt inputs to remove PII in real time and prompt outputs to detect PII data leakage. Other data privacy tools offer capabilities like AI firewall protection and data access governance.
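The sketch below shows the basic shape of real-time prompt screening: detect PII in a prompt, replace it before the prompt reaches the model, and run the same detector over model outputs to catch leakage. It uses a few regular expressions and typed placeholders such as `[EMAIL]` rather than realistic synthetic data, and the patterns and function names are hypothetical; production PII discovery tools typically combine many more detectors with ML-based entity recognition.

```python
import re

# Illustrative detectors only; real tools cover many more PII types and formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str):
    """Replace detected PII with typed placeholders; return (text, types_found)."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


# Screen the prompt before it is sent to the model.
prompt = "Summarize the complaint from jane.roe@example.com, phone 555-123-4567."
clean, found = redact(prompt)
print(clean)  # Summarize the complaint from [EMAIL], phone [PHONE].
print(found)  # ['EMAIL', 'PHONE']

# Screen the model's output for leakage before returning it to the user.
model_output = "Sure! You can reach her at jane.roe@example.com."
_, leaked = redact(model_output)
if leaked:
    print("Possible PII leakage in output:", leaked)
```

Screening both directions matters: input screening keeps PII out of logs and third-party models, while output screening catches PII the model memorized or inferred.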

- To learn more, read our PII Data Discovery Tools Comparison Guide

IT complexity / “Shadow IT”

Enterprise network architectures are highly complex, with thousands of moving parts spread across data centers, remote business sites, cloud platforms, and end-user homes. This complexity makes it challenging for IT teams to fully visualize the environment or easily locate and protect sensitive data. Plus, individual business units will often purchase their own software or cloud services without notifying IT or going through security onboarding, creating a scenario known as shadow IT.

Data discovery and observability tools help teams find and monitor data across the organization. For example, a PII discovery tool like Granica Screen detects sensitive information in structured, semi-structured, and unstructured cloud data lake storage. A data observability tool like Granica Chronicle AI provides insights into how specific users and applications access and utilize cloud data. These kinds of solutions improve an organization's data security posture by giving teams visibility into where the most sensitive information is and which users, applications, and AI technologies are accessing it.
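The value of combining discovery with observability comes from joining the two: discovery says where sensitive data lives, and access logs say who touches it. The sketch below performs that join on a hypothetical inventory of sensitive storage prefixes and a hypothetical list of parsed access events. The paths, principal names, and event format are assumptions for illustration and do not reflect the data model of Granica Screen, Granica Chronicle AI, or any cloud provider's audit logs.

```python
from collections import Counter, defaultdict

# Hypothetical discovery results: storage prefix -> PII types found there.
SENSITIVE = {
    "s3://lake/customers/": {"EMAIL", "SSN"},
    "s3://lake/orders/": {"EMAIL"},
}

# Hypothetical access events, e.g., parsed from storage audit logs.
events = [
    {"principal": "etl-job", "path": "s3://lake/orders/2024/01.parquet"},
    {"principal": "analyst-7", "path": "s3://lake/customers/part-0.parquet"},
    {"principal": "llm-finetune", "path": "s3://lake/customers/part-1.parquet"},
    {"principal": "llm-finetune", "path": "s3://lake/customers/part-2.parquet"},
    {"principal": "dashboard", "path": "s3://lake/public/metrics.csv"},
]


def sensitive_access_report(events):
    """Map each principal to (reads of sensitive data, sorted PII types touched)."""
    reads = Counter()
    pii_seen = defaultdict(set)
    for event in events:
        for prefix, pii_types in SENSITIVE.items():
            if event["path"].startswith(prefix):
                reads[event["principal"]] += 1
                pii_seen[event["principal"]] |= pii_types
    # most_common() lists the heaviest readers of sensitive data first.
    return {p: (n, sorted(pii_seen[p])) for p, n in reads.most_common()}


for principal, (count, pii) in sensitive_access_report(events).items():
    print(principal, count, pii)
```

A report like this would immediately surface, for example, an AI fine-tuning job reading SSN-bearing files, which is exactly the kind of access a security team would want to review.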

Solving data security challenges with Granica

The Granica AI data platform delivers powerful, cost-effective data discovery and observability capabilities for training and prompt data with state-of-the-art accuracy and high compute efficiency. Granica protects LLMs and other AI technology from training to inference to reduce the risk of data breaches while enabling successful AI outcomes.

Sign up for a free demo to learn how the Granica platform can solve your biggest data security challenges.